- Phonic Tonic

- #66 - Phonic Tonic - Building a Prototype (2⁄3)

- Only the Paranoid Survive

- Nuance Transcription Engine

- Watson Speech to Text

- Cloud Speech-to-Text

- Amazon Transcribe

- Sheet - Phonic Tonic Financials

- https://www.google.com/search?q=audio+to+text+transcription

- Rev

- Trint

- Outsourced Medical Transcription

- Don’t Call Yourself A Programmer, And Other Career Advice

- Salary Negotiation: Make More Money, Be More Valued

- Salary Negotiation and Job Hunting for Developers

- JSON transcript (1733270083763043607.txt.zip)

I just wanted to take a moment and thank you for supporting the site with your subscription. You are making this type of content possible and I really appreciate your support.

Alright, let’s dive in.

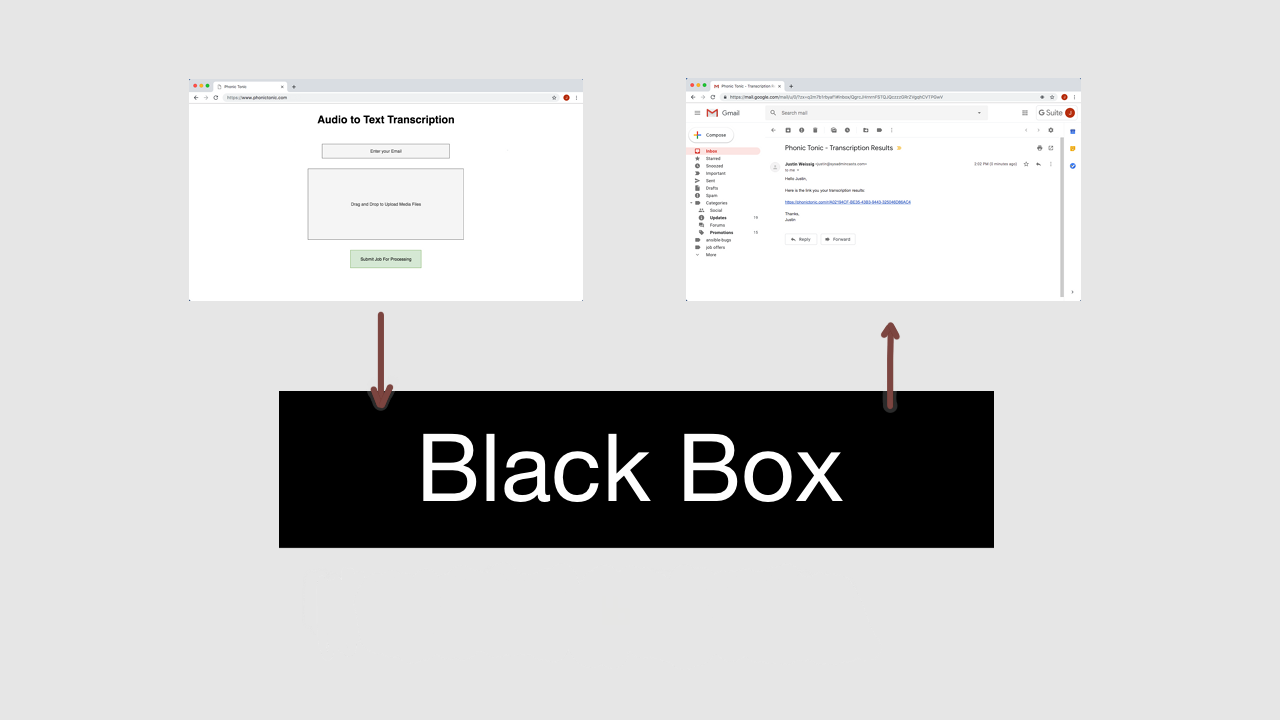

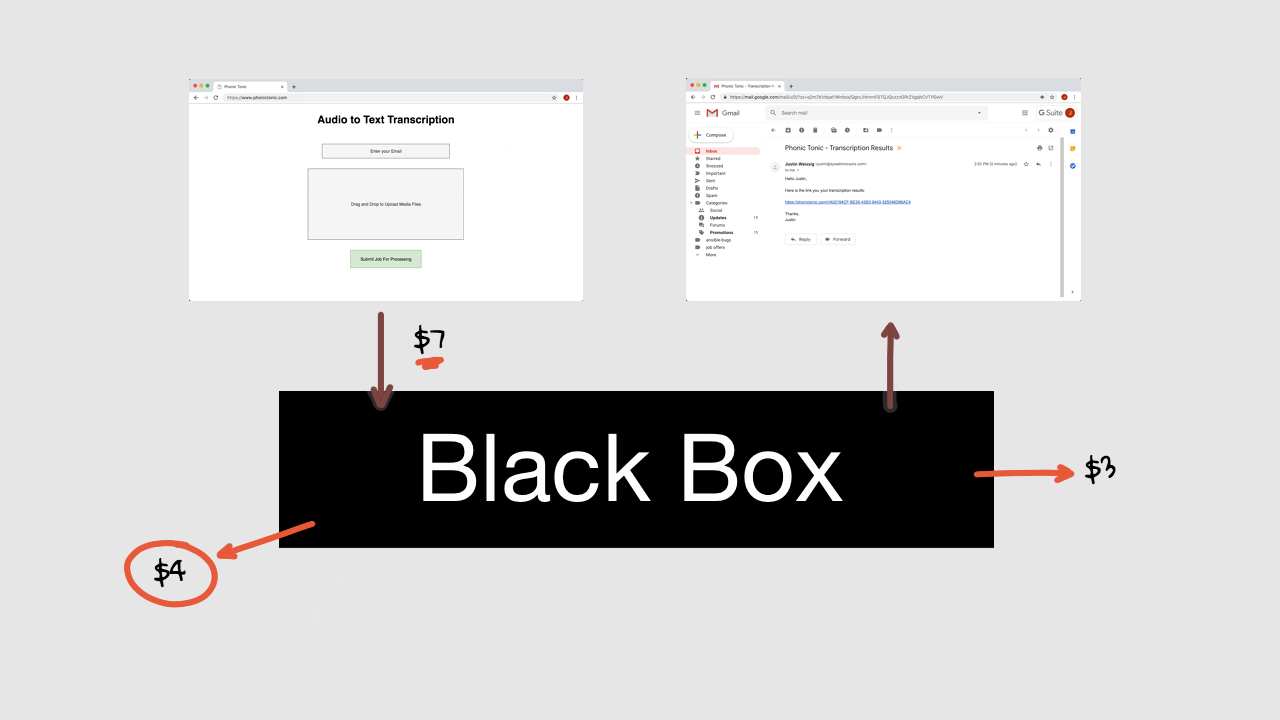

I thought it might be fun to mix things up a little by creating an episode series where we build an audio to text transcription service. The product idea is pretty simple: you upload your audio files to this website as an input, then on the backend infrastructure, here in this black box, we process and transcribe those uploaded audio files, and a short time later send you an email with the transcribed text files as an output.

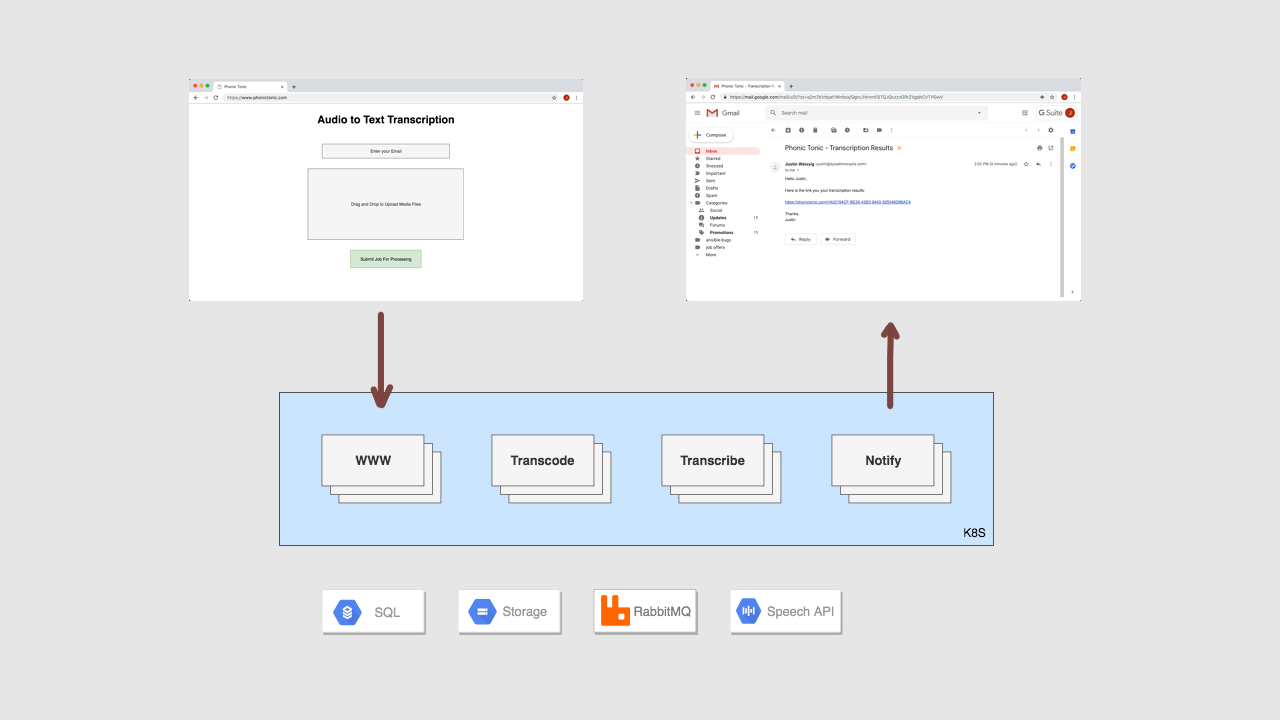

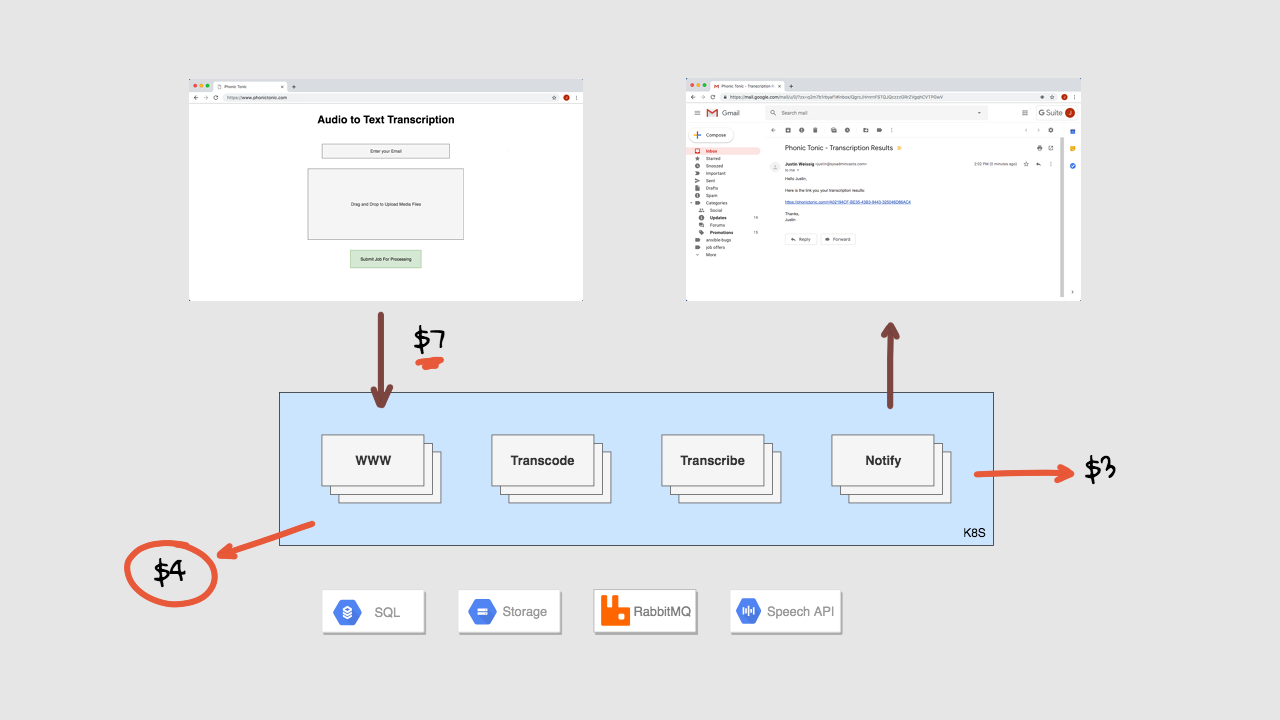

The goal of this episode series is to architect and build out what’s happening behind the scenes here, on the backend in this black box, that enables this simple customer workflow. By the time we are done, we should have a simple application that delivers state of the art automated audio to text transcription with high accuracy and lightning speed. As you can see, this will involve a bunch of different containerized services, a database, blob storage, a worker queue, and lots of remote transcription API calls. My thinking here is that I want to replicate what a real company might be dealing with, and hopefully we learn lots as we work through this. I am positive we will make mistakes and not get everything right on our first try, but that is actually a good thing, as we can build on these ideas in future episodes.

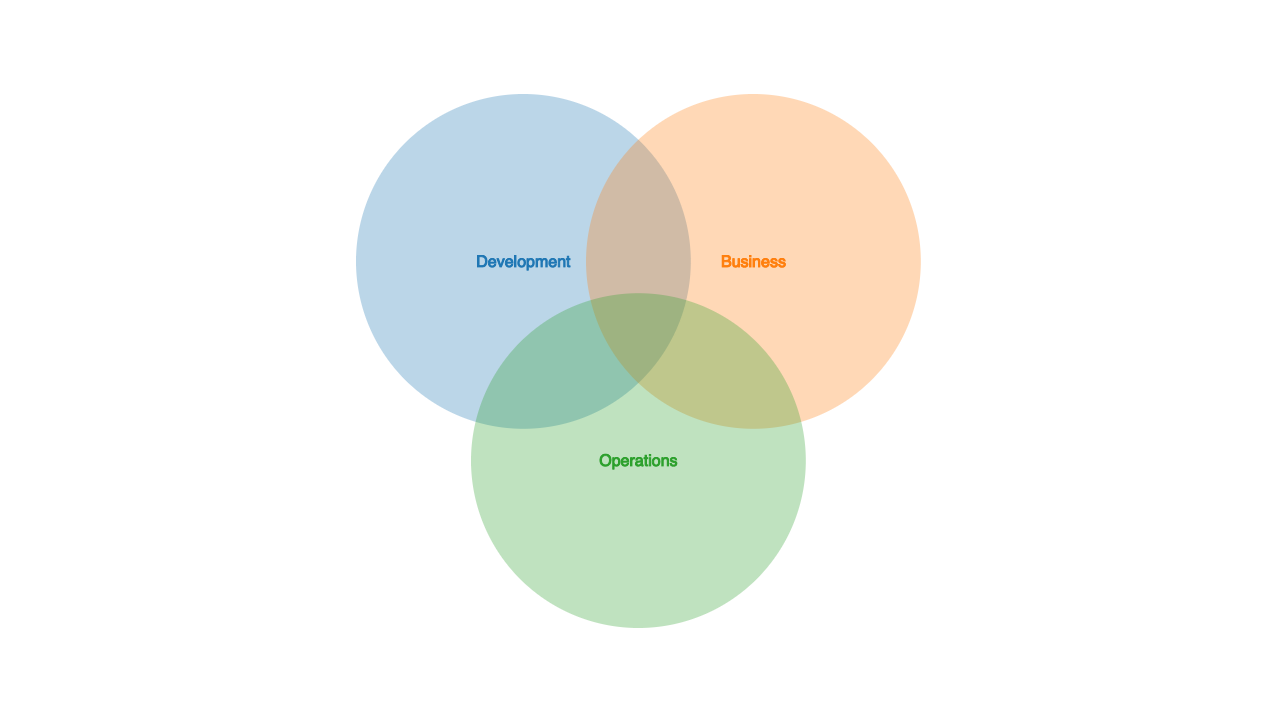

For example, I could see us looking at lots of topics around logging, monitoring, cost modeling, and probably infrastructure optimization, just to name a few. Additionally, when working on these types of things, there are often pros and cons to each path you choose, and we can sort of chat about that as we work through this. It should be pretty fun, as it will be a mix of business ideas, development work, and lots of backend operations stuff. Almost like a Venn diagram, as we will be briefly touching on many topics in these areas. I find it useful to work on these types of projects from time to time as it gives you a point of reference for what other groups in your company likely care about. It sort of puts you in their shoes.

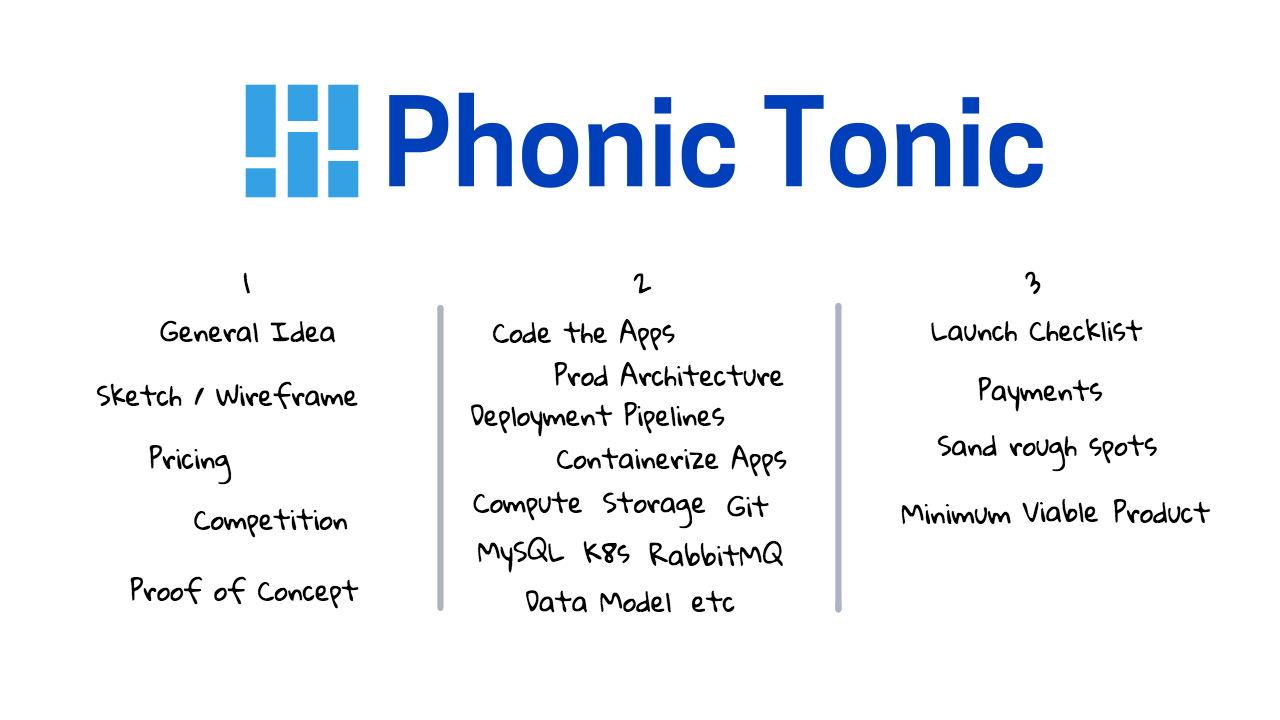

Alright, so I have broken the episode series into three parts. I picked the name Phonic Tonic, since it sounds sort of funny, rhymes, and the word Phonic is related to the sound of speech, and we are going to be adding some ML tonic into the mix. This is mostly just BS though, I was searching around for names that were not already taken, and this is what I came up with after looking through a Thesaurus.

The first episode, which you are watching now, is where we will chat about the general idea, walk through the workflow step-by-step, sketch things out, and chat about the backend workflow. We will also chat about the competitive landscape and product pricing, then we will look at a demo where we actually transcribe something at the command line via a remote API. This is sort of the core idea that we are going to build everything on top of.

In the second episode, we will design, code, and containerize our website, media transcoder, audio transcriber, and email notifier services. There will also be a bit where we chat about what a data model looks like for this thing (and how I typically approach that). We will also wire all this up to the backend database, storage, and worker queue systems (mostly sitting on Kubernetes). This will be super technical and fast paced. You will also get all the code as we work through this together.

Finally, in the third and final episode, we will round things out by checking out what a Product Launch Checklist looks like, chatting about payment processing options, and mostly just sanding off any rough spots for our Minimum Viable Product. The end result here should be to launch something that regular people out on the internet can actually use for quickly transcribing their audio files to text, on a self-serve basis.

So, why pick this idea and create a website like this? What is so important about an audio to text transcription service? Well, this stems from sort of a trend that is developing. I think there is an opening for a dead simple, fully-automated transcription service that is self-serve, super cheap, fast, and gets you 95% of the way there in terms of accuracy. Sure, the end user will have to put in some work to clean up the transcription, but they will save tons of money.

Let me explain. I read this book a few years back, Only the Paranoid Survive, about the founding of Intel and how they noticed something that no one else did. Basically, that integrated circuits were going to be huge and they needed to put everything they had into it. I highly recommend this book by the way. I think there is a similar mini-trend happening today when it comes to the latest advancements in machine learning in terms of speech recognition. It used to be the case that you would have to spend mega bucks and use a custom trained speech recognition engine from a company like Nuance. But, just in the past 3-4 years, IBM released a general transcription model that works across lots of speakers, and it’s pay as you go. Then Google released one 2 years ago. Finally, AWS released theirs last year. This is the kind of technology powering all the Alexa and Google Assistant devices out there. So, these tools are becoming much more advanced by the day as the folks at these companies are gathering more data and refining things. They are only going to get better, the price is going to drop further, and it will likely get much quicker as the algorithms get tuned.

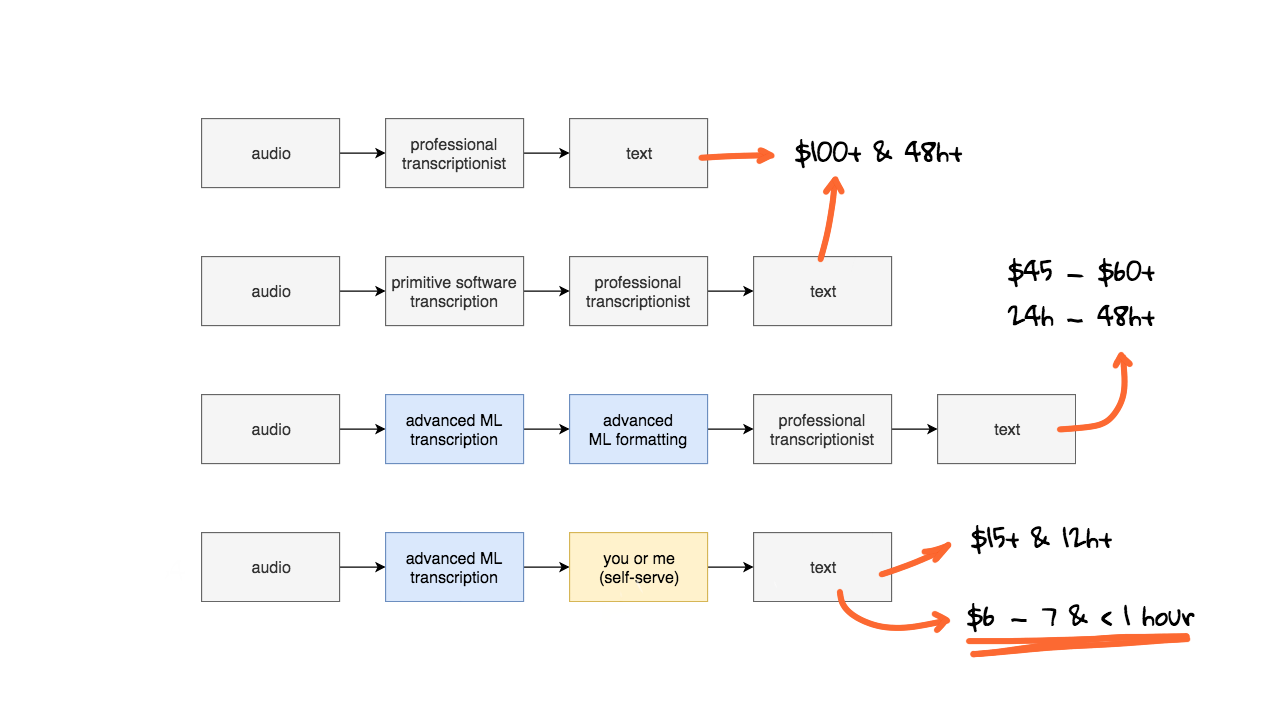

Here’s how I see things. By the way, I am not an expert here at all, just someone who has done some research and built a few prototypes. Say, 15 years ago, if you wanted to get something transcribed, like closed captioning for movies and TV shows, medical records, etc, you would pay big bucks to professional transcribers. The next iteration was that software came along to assist professional transcribers. This was software like Dragon Dictate and other custom stuff from Nuance to help automate much of the manual work. But, this was cumbersome and the accuracy was not all that great.

Well, just in the past couple of years, we are getting way more advanced machine learning models that are totally generalised and work across lots of speakers. Accuracy is shooting through the roof too. This software used to have massive problems with speakers with accents and things like that. There is also much more advanced tooling around formatting transcripts for specific use-cases, things like medical templates, punctuation, legal use-cases, and lots of other stuff. This is increasing professional transcribers’ productivity, but also causing loss of work on the high end, since they are essentially proofreading what the computer is doing.

Within the past 2-3 years, developers have been able to sign up for IBM Watson, Google Speech-to-Text, or Amazon Transcribe, and get access to these really advanced tools for a few pennies per minute.

Let me add some numbers. For the first two use-cases here, it is not uncommon to pay $100 plus an hour to a professional transcriptionist, with a turnaround time of at least a few days. If you wanted a rush job, think about doubling this rate. Have multiple speakers? Add more money. Does someone have an accent? Add more money. You get the idea.

Now, lots of the existing transcription companies are integrating these advanced machine learning models as sort of a preprocessing step before a transcriptionist will see the job. So, they can process many more jobs, but the price per job is also going way down. Turnaround time is also still pretty high as they can now accept more high priority jobs on the front end.

Right now, you are starting to see startups leveraging these new APIs from IBM, Google, and AWS to further drop the price and speed things up. I should point out these APIs are only accessible programmatically. So, the missing piece here is for someone to write a super simple way to process jobs through them. Well, this is what I wanted to focus on. I suspect these prices can drop a lot more and the turnaround can be dropped significantly for most of this. I had sort of a startup idea around this and that’s where the idea came from. In that, these larger companies are going through massive 10X changes, both in price drops and turnaround times, given the latest advancements in these cloud transcription offerings. That is why I wanted to build this.

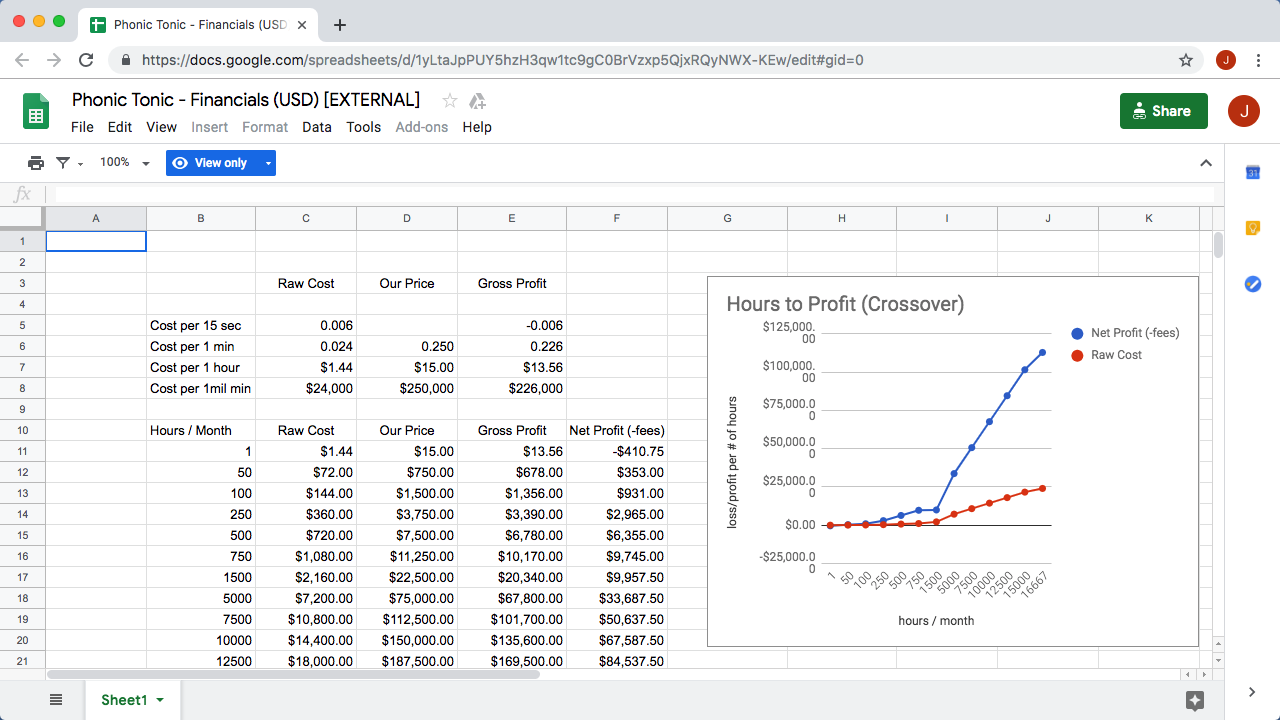

To help explain this, I put together a simple spreadsheet that runs through the numbers. This is super high-level but will likely help paint the picture a little better of what these new machine learning speech tools are enabling.

So, the first column here shows the raw cost for using the IBM, Google, and AWS transcription services. The per-minute cost is roughly $0.024 (2.4 cents), which works out to about $1.44 per hour of audio. Now, most of these cloud based transcription services only accept transcription jobs in a specific audio file format. So, we need to run some infrastructure to accept the jobs, transcode or convert uploaded files into a format that works, submit and babysit the jobs, and format the results into something human readable. This is where our price comes in. So, you can play around with these numbers here, and sort of figure out how much you would need to charge for it to be worth it, as you’ll need to pay infrastructure costs, wages, fraud, taxes, etc. This is pretty much rough napkin math, but it sort of helps paint a picture of what is going on. So, we’d need to process around 5000 to 7500 hours of audio per month to make pretty decent money. Granted, this only works if you are a one or two person company. But, you really don’t need much since the operational overhead of running this type of company is pretty low.

So, we need to charge around $6 an hour (about $0.10 per minute) to cover our costs. There is totally room for smaller companies and startups to start nibbling away at the current market share. If we bump the price up a little, let’s say to $0.25 per minute, that gets us up to $15 per hour. This is what I found a few startups charging. Obviously we would need to work on the numbers more and start profiling workloads to figure out the exact cost per job, in terms of compute, storage, and bandwidth, but from what I can see this mostly makes sense.
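The napkin math above can be sketched in a few lines. This is only an illustration, assuming the raw API cost is read as $0.024 per minute and using the two price points mentioned here; the function names are made up for this example, and the margin deliberately ignores infrastructure, wages, fraud, and taxes, which the spreadsheet handles separately.

```python
from decimal import Decimal

# Raw cloud transcription cost per minute of audio in USD, as quoted in
# the episode. Everything else in this sketch is illustrative.
API_COST_PER_MINUTE = Decimal("0.024")
MINUTES_PER_HOUR = 60


def per_hour(per_minute: Decimal) -> Decimal:
    """Convert a per-minute rate into a per-hour rate."""
    return per_minute * MINUTES_PER_HOUR


def monthly_gross_margin(hours: int, price_per_minute: Decimal) -> Decimal:
    """Revenue minus raw API cost for a month of processed audio.

    Infrastructure, wages, fraud, and taxes are NOT included here.
    """
    revenue = per_hour(price_per_minute) * hours
    api_cost = per_hour(API_COST_PER_MINUTE) * hours
    return revenue - api_cost


if __name__ == "__main__":
    print("raw API cost per hour:", per_hour(API_COST_PER_MINUTE))
    print("price per hour at $0.10/min:", per_hour(Decimal("0.10")))
    print("price per hour at $0.25/min:", per_hour(Decimal("0.25")))
    print("margin on 5000 hours at $0.10/min:",
          monthly_gross_margin(5000, Decimal("0.10")))
```

Using `Decimal` rather than floats keeps the cents exact, which matters once these numbers end up on an invoice.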

I know this is pretty far out from my usual topics. But honestly, I think this is a useful topic to discuss as most startups and companies operating in the cloud will look at this type of stuff. I have often been asked to budget workloads and give cost estimates. This is actually a really critical skill. So, it is super useful to be thinking about this stuff. I have added this spreadsheet in the episode notes below if you want to have a look. So, say we are charging something between 6 and 15 bucks per hour of audio, let’s flip back to that technology comparison for a minute. We are still pretty much state of the art for price, automated accuracy, and job turnaround time. This is all assuming the customer would want to spend a few minutes on their own proofreading and doing a little formatting. But, I suspect there are tons of people willing to do that.

This opens up lots of new customer use-cases too since transcription is now so cheap. So, they might decide to do things they otherwise would not have. We could probably even drive the cost per hour down even more, as we learn the business, and automate things. So, this is my very long winded explanation of where the idea came from. I was mainly thinking about the impact of these new machine learning tools on existing companies.

But, there are a few big problems with this idea. We need to get lots of new customers in the door each month and that could be a costly and complex problem. Also, what happens when these established companies create a budget option and offer something around the same price as us? This is why I don’t really want to go full time on the idea. In that, it seems pretty risky, as you are going to face tons of new competition here. So, I thought it would be cool to use this idea as a teaching tool, but also launch this for real at the same time. So, we can sort of learn about this together. Please let me know if you have any ideas around this too. It would be nice to tap into the hive mind!

So, let’s chat about competitors for a minute. If you search “audio to text transcription” you will instantly see lots of offerings, with companies offering transcription options ranging from $0.20 per minute to $1 per minute or more. We already looked at how we could likely offer something in the $0.10 per minute range. The big one here seems to be rev.com, where they will have a professional review the transcript before sending it over, which is pretty cool but costly. The next one is trint.com, and they appear to be closer to our idea of just offering an automated tool to get your transcription job done quick and cheap, where you do all the final editing yourself. They also seem to have a really polished tool. Finally, you have the mega corps that are doing most of the medical transcription stuff. I think this is actually a really good idea. You likely want a human reviewing medical terminology and medication dosages and things like that. They don’t even list the prices, so you can guess it is mega bucks too. This is not the target market we are after. I am more thinking about the student that wants to transcribe a lecture, or podcasters, or lots of office folks that want to transcribe meeting notes, things like that.

Alright, so that is my brain dump on what I am seeing, and what I have been thinking about in terms of this idea. So, what does a product, and backend architecture look like for actually processing these types of transcription jobs?

Well, I sort of envision a website where you just enter your email, drag and drop your files, then click submit. We probe all the files, calculate a cost, and ask the user if they want to pay. If they say yes, we process the jobs on the backend, and they get an email link to the results shortly after. Why the delay here? Well, most of the cloud based transcription services take a few minutes to process the jobs, especially if they are really long audio files. So, we need to accept the customer’s jobs, process them, babysit them, and eventually return the results.
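As a rough sketch of the "probe the files and calculate a cost" step, the quote could be computed from each file's duration. The price of $0.10 per minute and the rule of rounding each file up to a whole minute are assumptions for illustration, not something the episode pins down, and `quote` is a made-up helper name.

```python
import math
from decimal import Decimal

# Hypothetical customer price per minute of audio, in USD.
PRICE_PER_MINUTE = Decimal("0.10")


def quote(durations_seconds: list[float],
          price_per_minute: Decimal = PRICE_PER_MINUTE) -> Decimal:
    """Return the total price for a batch of uploaded files.

    Each file is billed in whole minutes, rounded up. That rounding rule
    is an assumption made for this sketch.
    """
    billable_minutes = sum(math.ceil(d / 60) for d in durations_seconds)
    return price_per_minute * billable_minutes


if __name__ == "__main__":
    # A 7 minute file plus a file slightly over 1 minute: 7 + 2 minutes.
    print(quote([420.0, 61.5]))
```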

Let’s walk through how this would actually work step-by-step. So, a user shows up at our website, hitting our main web service. They enter their email, upload their files, and pay. We flush all this user data into a SQL database for order tracking, upload the audio files to cloud storage, and then submit a job into a queue for processing. Episode #59, on RabbitMQ, might be a good refresher on worker queues here, to add some background context.

I mentioned before that these cloud transcription services are super picky about what formats of audio they accept. So, say for example a lawyer records a video deposition and wants to get a text transcript. Well, we will likely need to strip the audio track out of that video, and convert that audio into a format the transcription service accepts. So, the next worker in the queue is this transcode service that fetches the audio files from storage, converts them into acceptable formats for us, then saves them back to cloud storage using a new name.
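To make the transcode step concrete, here is a minimal sketch that builds an ffmpeg command for stripping the video track and converting the audio to 16-bit PCM WAV. The episode does not say which tool the transcode service uses, so ffmpeg, the mono downmix, the 16 kHz sample rate, and the `.transcoded.wav` naming are all assumptions. The function only builds the argument list; it does not run anything.

```python
from pathlib import PurePosixPath


def transcode_command(source: str, sample_rate_hz: int = 16000) -> list[str]:
    """Build an ffmpeg command that extracts audio as 16-bit PCM mono WAV.

    The output is written next to the source under a new name, so the
    original upload is left untouched.
    """
    path = PurePosixPath(source)
    target = str(path.with_name(f"{path.stem}.transcoded.wav"))
    return [
        "ffmpeg",
        "-i", source,                 # input file (audio or video)
        "-vn",                        # drop any video stream
        "-ac", "1",                   # downmix to a single channel
        "-ar", str(sample_rate_hz),   # resample to the chosen rate
        "-acodec", "pcm_s16le",       # 16-bit little-endian PCM
        target,
    ]


if __name__ == "__main__":
    print(" ".join(transcode_command("uploads/deposition.mp4")))
```

A worker would hand this list to something like `subprocess.run` after downloading the file from storage, then upload the result under the new name.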

Next, we submit a task back into the queue for this audio file to be transcribed. The transcription service grabs the task out of the queue and submits a job to the cloud provider’s transcription API, along with a config and a link to where the preprocessed media is sitting in cloud storage. Then, the worker waits for this transcription job to complete, which might take seconds or many minutes. Finally, it completes and we save the results back to cloud storage, and update the database with the final status.

Finally, we submit another task into our task worker queue for the notify worker service, to let the customer know their job is finally complete.
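The hand-offs described above can be sketched with an in-process queue. This is only a toy model of the flow: the standard library `queue.Queue` stands in for a real broker such as RabbitMQ, the dictionaries stand in for the SQL database and the email system, and every function and field name here is hypothetical.

```python
from queue import Queue

# Hypothetical in-memory stand-ins for the order database and outbound email.
orders: dict[str, str] = {}
sent_emails: list[str] = []


def transcode(task: dict) -> dict:
    # Pretend to convert the upload and store it under a new name.
    return {**task, "audio": task["upload"] + ".wav", "stage": "transcribe"}


def transcribe(task: dict) -> dict:
    # Pretend to call the cloud API and wait for the job to finish.
    orders[task["order_id"]] = "transcribed"
    return {**task, "stage": "notify"}


def notify(task: dict) -> None:
    # Pretend to email the customer a link to the results.
    sent_emails.append(task["email"])
    orders[task["order_id"]] = "complete"
    return None


HANDLERS = {"transcode": transcode, "transcribe": transcribe, "notify": notify}


def submit_order(queue: Queue, order_id: str, email: str, upload: str) -> None:
    """Record the order and enqueue the first task, as the web service would."""
    orders[order_id] = "queued"
    queue.put({"stage": "transcode", "order_id": order_id,
               "email": email, "upload": upload})


def run(queue: Queue) -> None:
    """Drain the queue, letting each stage enqueue the next one."""
    while not queue.empty():
        task = queue.get()
        follow_up = HANDLERS[task["stage"]](task)
        if follow_up is not None:
            queue.put(follow_up)


if __name__ == "__main__":
    q = Queue()
    submit_order(q, "order-1", "user@example.com", "talk.mp4")
    run(q)
    print(orders, sent_emails)
```

In the real system each handler would be its own containerized worker pulling from the broker, so the stages can run in parallel and be scaled independently.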

So, that is the end-to-end workflow for what we will be building. I wanted to use this decoupled task queue worker model because we will have lots of background things running in parallel that will take a variable amount of time to complete. Since each step is fairly isolated, this gives us a little more operational flexibility too. This architecture also scales really well. We will cover this heavily in the next episode and walk through some pros and cons as we do it. There is not anything too complex on the backend here, but ultimately the customer doesn’t really care about any of this. From their perspective, this is pretty much a black box. So, we need to make this as simple and easy to use as possible, so that users want to use our service.

This gets us back to that Venn diagram from earlier. Just like your customers, I find that many folks inside your company will also think of what you are doing as a black box. They don’t care whether we are using containers or not, Kubernetes or not, a worker queue or not, etc. They just want it to work, be reliable, and get customers through the door to give the company money. It took me many years to learn this fact. The company really only cares if this positively or negatively impacts the bottom line. This little piece of insight can be extremely powerful though.

Take this example. Say the customer pays you $7, and $3 automatically goes out of your black box and into other people’s hands, like the cloud provider, payment processor, your wages, etc. Your company only sees $4 of that. Thinking from a management or executive perspective, they are always trying to get better returns. They might see charging the customer more as an option, they might see outsourcing pieces of your black box as an option too, etc. All in an effort to increase what is coming into the company.

This isn’t really related to the episode, but I thought of it while working through this, so I wanted to mention a few useful ideas for getting good performance reviews. Folks in the operations space have a unique perspective as we totally understand what’s happening behind the scenes. This is where you can really shine on your performance reviews if you look at things from a management or executive perspective. How can you increase the value to the customer or company? Having some impact statement around how you saved the company X amount of money, and decreased customer turnover by Y, can really go a long way to making you more money. I found that focusing on the impact and metrics that increase the company’s bottom line goes over really well. The post that really opened my eyes to this line of thinking was this article, Don’t Call Yourself A Programmer, but this totally applies to DevOps folks too. Here’s another one on how to make yourself more valuable to the company, basically by using your unique knowledge. There was also another really good talk by the same guy, Patrick McKenzie, also known as patio11 on Hacker News, around salary negotiations. This stuff is pretty invaluable and has personally worked for me when I applied it. This isn’t something you are likely going to use right now, but it really helps to know, so you can create an action plan for your next review cycle. Working on these diagrams sort of jogged my memory about this topic so I thought I’d include it. Hopefully you are wildly successful using it.

Anyways, that pretty much wraps up what I wanted to chat about. Hopefully, you will find this episode series useful. I really think it is worthwhile since you can apply lots of this stuff elsewhere too. Before we wrap things up though, I wanted to show you a demo, or proof of concept, that we can actually get audio transcribed using a cloud API.

Over at the console here, I am just going to copy and paste a command for calling Google Cloud’s transcription API. You can see I am using curl to call the Speech API. I am composing a request with the following configuration, setting the audio encoding to LINEAR16, which is the uncompressed 16-bit PCM audio you typically find in a WAV file, and the language we want to use, in this case US English. The speaker diarization option allows you to tag different speakers in an audio recording. So, you might see something like person #1 said this, person #2 said this, which is really useful for transcribing an interview for example. It is pretty crazy that a computer can automatically tag individual speakers and map things out like that. Next, you can specify the machine learning model we want to use. These are tuned for things like phone recordings, videos, etc, but I have been getting the best results using the video model. The word time offsets line tells the API we want to get word boundaries too, which will give us the time codes for word start and end positions. This would be really handy if we wanted to generate closed captions or something. Word confidence will score each word, using the model’s confidence that it understood what was said. Next, we ask the API to automatically add punctuation, for things like capitalization, commas, and periods. This is pretty cool, in that the model will guess what punctuation needs to go where. This is not perfect, but can speed things up when you are proofreading and generally correcting things. Finally, we tell the Speech API where the file we want it to transcribe is. In my case, this is sitting in a storage bucket, and the audio file is from episode #54.

Alright, so let’s run it.

    # submit job
    $ curl -s -H "Content-Type: application/json" \
        -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
        https://speech.googleapis.com/v1p1beta1/speech:longrunningrecognize \
        --data '{
      "config": {
        "encoding": "LINEAR16",
        "languageCode": "en-US",
        "enableSpeakerDiarization": true,
        "model": "video",
        "enableWordTimeOffsets": true,
        "enableWordConfidence": true,
        "enableAutomaticPunctuation": true
      },
      "audio": {
        "uri": "gs://XXX-YOUR-BUCKET-XXX/XXX-YOUR-FILE-XXX.wav"
      }
    }'
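When the transcriber worker submits jobs from code rather than from the shell, it needs to build the same request body. The sketch below mirrors the fields from the curl command above, with comments summarizing what each option does; the function name is made up, and how the body actually gets sent (HTTP client, auth) is left out on purpose.

```python
import json


def build_recognize_request(gcs_uri: str) -> dict:
    """Return the same request body the curl command sends."""
    return {
        "config": {
            "encoding": "LINEAR16",              # uncompressed 16-bit PCM
            "languageCode": "en-US",             # US English
            "enableSpeakerDiarization": True,    # tag who said what
            "model": "video",                    # model tuned for video audio
            "enableWordTimeOffsets": True,       # start/end time per word
            "enableWordConfidence": True,        # confidence score per word
            "enableAutomaticPunctuation": True,  # commas, periods, capitals
        },
        "audio": {"uri": gcs_uri},               # file in a storage bucket
    }


if __name__ == "__main__":
    body = build_recognize_request(
        "gs://XXX-YOUR-BUCKET-XXX/XXX-YOUR-FILE-XXX.wav")
    print(json.dumps(body, indent=2))
```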

The only output we get is this JSON object with our job name.

    # returned job name
    {
      "name": "1733270000003043607"
    }

I am just going to copy and paste another command here with that job name as input. Again, we are calling the Speech API from the command line here, using the curl command. This number here is that job number, or name, we got as output from the previous command. Let’s run this to check on the status of our transcription request.

    # check job status
    $ curl -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
        -H "Content-Type: application/json; charset=utf-8" \
        "https://speech.googleapis.com/v1p1beta1/operations/1733270000003043607"

Then, we get this JSON object back.

    {
      "name": "1733270083763043607",
      "metadata": {
        "@type": "type.googleapis.com/google.cloud.speech.v1p1beta1.LongRunningRecognizeMetadata",
        "progressPercent": 18,
        "startTime": "2019-04-04T11:10:44.883846Z",
        "lastUpdateTime": "2019-04-04T11:15:37.546914Z"
      }
    }

For our job, we are at 18 percent processed right now. I am just going to pause the video here, as this takes about 5 minutes to complete. If you keep running this command, eventually it will spit out the results. I’ve started the video again. What I have been doing while testing this is just redirecting the output to a text file, where I can then look at the results using a text editor. By the way, the audio file we processed was about 7 minutes long, and it took 5 minutes to process. This file cost about $0.17 to process based off the numbers in our spreadsheet (7 minutes at about 2.4 cents per minute), whereas transcribing it manually would have taken a long time.
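Re-running the status command by hand is exactly the babysitting the transcriber worker would automate. Here is a minimal polling sketch, assuming the caller supplies a `fetch` function that performs the GET against the operations endpoint and returns the parsed JSON. The interval, the attempt limit, and the function name are arbitrary choices for illustration.

```python
import time
from typing import Callable


def wait_for_operation(fetch: Callable[[], dict],
                       interval_seconds: float = 15.0,
                       max_attempts: int = 80,
                       sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll until the operation reports "done": true, then return it."""
    for _ in range(max_attempts):
        operation = fetch()
        if operation.get("done"):
            # A finished operation carries either a response or an error.
            if "error" in operation:
                raise RuntimeError(f"transcription failed: {operation['error']}")
            return operation
        progress = operation.get("metadata", {}).get("progressPercent", 0)
        print(f"progress: {progress}%")
        sleep(interval_seconds)
    raise TimeoutError("transcription did not finish in time")


if __name__ == "__main__":
    # Canned responses standing in for real API calls.
    canned = iter([
        {"metadata": {"progressPercent": 18}},
        {"metadata": {"progressPercent": 100}, "done": True, "response": {}},
    ])
    print(wait_for_operation(lambda: next(canned), sleep=lambda _: None))
```

Passing `fetch` and `sleep` in as parameters keeps the loop easy to test without touching the network or actually waiting.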

    # when complete send to file
    $ curl -H "Authorization: Bearer $(gcloud auth application-default print-access-token)" \
        -H "Content-Type: application/json; charset=utf-8" \
        "https://speech.googleapis.com/v1p1beta1/operations/1733270000003043607" > 1733270000003043607.txt

Alright, so we have our file saved here. Let me just check the size, in case you are wondering. It is about 1.6MB. Let’s use the head command to see what the resulting job start and stop times were. So, you can see it is 100 percent complete, and took just shy of 5 minutes to process from start to finish.
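The "just shy of 5 minutes" figure can be checked from the two timestamps in the job metadata. This small sketch assumes the timestamps carry microsecond precision, as they do in this job's output; timestamps with more fractional digits would need different parsing. The function name is made up.

```python
from datetime import datetime

# Matches timestamps like "2019-04-04T11:10:44.883846Z".
TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def elapsed_seconds(metadata: dict) -> float:
    """Seconds between the job's startTime and lastUpdateTime."""
    start = datetime.strptime(metadata["startTime"], TIMESTAMP_FORMAT)
    end = datetime.strptime(metadata["lastUpdateTime"], TIMESTAMP_FORMAT)
    return (end - start).total_seconds()


if __name__ == "__main__":
    job = {
        "startTime": "2019-04-04T11:10:44.883846Z",
        "lastUpdateTime": "2019-04-04T11:15:37.546914Z",
    }
    print(f"{elapsed_seconds(job) / 60:.2f} minutes")
```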

Let’s use the less command and browse through the file. By the way, I’ll post a link to the raw file in the episode notes below, if you want to have a look through it. So, in this file you will see tons of JSON blocks that represent transcribed text from our audio file. You can see it has punctuation too, capitalization, commas, periods, etc. Pretty cool, right?

    {
      "name": "1733270083763043607",
      "metadata": {
        "@type": "type.googleapis.com/google.cloud.speech.v1p1beta1.LongRunningRecognizeMetadata",
        "progressPercent": 100,
        "startTime": "2019-04-04T11:10:44.883846Z",
        "lastUpdateTime": "2019-04-04T11:15:37.546914Z"
      },
      "done": true,
      "response": {
        "@type": "type.googleapis.com/google.cloud.speech.v1p1beta1.LongRunningRecognizeResponse",
        "results": [
          {
            "alternatives": [
              {
                "transcript": "In this episode we're going to be building a fairly simple web application where we can upload images via dragging and dropping them and then get back useful metadata about these images and Json format. Finally, we'll walk through the steps of container izing this application using Docker. All right, you can see we have an area for dragging over the images here and then over on my desktop. We have a bunch of image files. So let's drag over the first image and get a feel for how this application actually works.",
                "confidence": 0.8961378,
                "words": [
                  {
                    "startTime": "7.100s",
                    "endTime": "7.500s",
                    "word": "In",
                    "confidence": 0.8724281
                  },
                  {
                    "startTime": "7.500s",
                    "endTime": "7.600s",
                    "word": "this",
                    "confidence": 0.9128386
                  },
                  {
                    "startTime": "7.600s",
                    "endTime": "7.900s",
                    "word": "episode",
                    "confidence": 0.9128386
                  },
    ...

Then, if we scroll down here, you can see each word is broken out, with start and end times, along with a confidence score. Each word throughout the transcript will have this. So, depending on how long your audio file is, this could be quite a big file.
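To turn that raw JSON into something a customer can proofread, a worker could pull out the transcript text and flag the words the model was least sure about. This is a rough sketch based only on the fields visible in the output above; it takes the first alternative of each result, and the 0.9 threshold and function names are arbitrary choices for illustration.

```python
def parse_offset(value: str) -> float:
    """Convert an offset such as "7.100s" into seconds."""
    return float(value.rstrip("s"))


def full_transcript(operation: dict) -> str:
    """Join the first alternative of every result into one text."""
    parts = []
    for result in operation["response"]["results"]:
        if result.get("alternatives"):
            parts.append(result["alternatives"][0]["transcript"].strip())
    return " ".join(parts)


def low_confidence_words(operation: dict,
                         threshold: float = 0.9) -> list[tuple[float, str, float]]:
    """Return (start seconds, word, confidence) for words below the threshold."""
    flagged = []
    for result in operation["response"]["results"]:
        for alternative in result.get("alternatives", [])[:1]:
            for word in alternative.get("words", []):
                if word["confidence"] < threshold:
                    flagged.append((parse_offset(word["startTime"]),
                                    word["word"], word["confidence"]))
    return flagged


if __name__ == "__main__":
    sample = {"response": {"results": [{"alternatives": [{
        "transcript": "In this episode",
        "words": [
            {"startTime": "7.100s", "endTime": "7.500s",
             "word": "In", "confidence": 0.8724281},
            {"startTime": "7.500s", "endTime": "7.600s",
             "word": "this", "confidence": 0.9128386},
        ],
    }]}]}}
    print(full_transcript(sample))
    print(low_confidence_words(sample))
```

The same start and end offsets are what you would feed into a closed caption generator.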

Actually, let’s jump back to the command line and see how many lines this file has. Yeah, so about 52 thousand. So, this is the speech transcription API in a nutshell. The IBM Watson one and Amazon Transcribe are pretty much the same. As you can tell, this is not really user friendly from a non-technical person’s perspective.

This is why adding a super simple user interface here could be game changing. You just enter your email, drag and drop your file, then click submit. In the background we go and coordinate all the file processing and job shuffling, and just send you the processed and cleaned text results.

Alright, well that’s it for this episode. Please let me know what you think about these types of projects. I wanted to complete the one from episode #51 too, around building out a car pooling service. I think it helps to add some variety from week to week, if we mix in these types of things. I’ll cya next week. Bye.