- Cloud Run

- Announcing Cloud Run

- Cloud Run Quickstart: Build and Deploy

- Function as a Service

- AWS Lambda

- AWS Lambda FAQs

- AWS Fargate

- AWS Fargate FAQs

- Google Cloud Functions

- Top 10 Uses of a Worker System

- HN Thread | Cloud Run beta pricing

- HN Thread | Cloud Run – Newest member of our serverless compute stack

- asmttpd

- FROM scratch

- jweissig/69-cloud-run-with-knative | GitHub

In this episode, we’ll look at the recently launched Cloud Run Beta. It is a managed service that runs stateless containers, which are invoked via HTTP requests. We’ll also look at the differences between Cloud Run, Lambda, and Fargate. There are demos too.

The reason I wanted to check out the Beta of Google’s Cloud Run is that there is lots of innovation happening around managed, single-instance containers. The core idea here is that you just tell one of these Cloud Platforms that you want to run one of your containers, with a specific amount of CPU and Memory, and they look after all the gory details behind the scenes. You do not need to worry about orchestration and all the other infrastructure stuff, which is pretty neat. But the really cool thing about this is that you only pay for the CPU and Memory your container is using while servicing requests. Other than that, you do not pay anything. So, this can be super economical for certain types of workloads.

Before we dive in, I wanted to chat about where I see Cloud Run fitting into the larger Containers In Production Map. If you haven’t seen this map before, it is sort of a high-level map of where I see DevOps tools fitting into the ecosystem. So, at a high level, I see Cloud Run fitting in around this Orchestration category. But we are not the ones running it; we pass all that over to a Cloud Provider. Personally, I like to find how new tools are similar to what I already know, as that seems to shorten the learning curve. So, let’s do that. I see many similarities between Cloud Run and things like AWS Lambda, AWS Fargate, and Google Cloud Functions. But how is Cloud Run the same as, or different from, these other products?

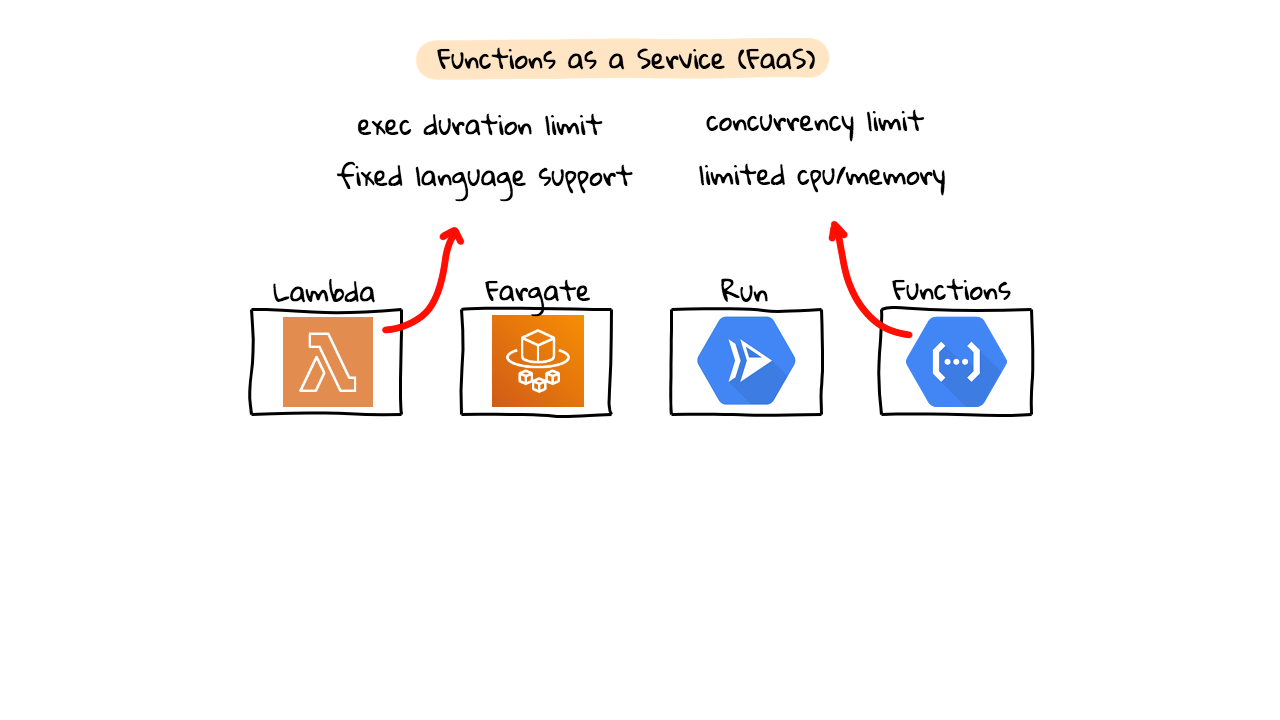

Well, I would put these four products into two broad categories. First, you have Functions as a Service (FaaS), and I see both AWS Lambda and Google Cloud Functions fitting into this category. You basically just define the functional code you want to deploy, things like sending emails, converting images, processing messages in a queue, etc., and the Cloud Providers handle all the behind-the-scenes stuff for you. You only pay for what you use too, so you don’t have to have a bunch of infrastructure sitting around idle. This is serverless in a nutshell. Well, there are servers, just not your servers. So, you are serverless, not them.

However, there are limitations to this. For example, AWS Lambda and Google Cloud Functions both have limited code execution times. Lambda supports 15 minutes and Google Cloud Functions supports 9 minutes; after these timeouts, your function is terminated. Both also have a fixed number of languages they support, so you cannot just run any old code or custom library you want. Next, there are concurrency limits on how many requests you can actually send to these functions at once. Finally, there are also limits on how much CPU and memory you can assign to these things. There are other things too, but this gives us enough information for now to see how things like AWS Fargate and Google Cloud Run are different. So, this mental model is what I think of when people mention Functions as a Service (FaaS).

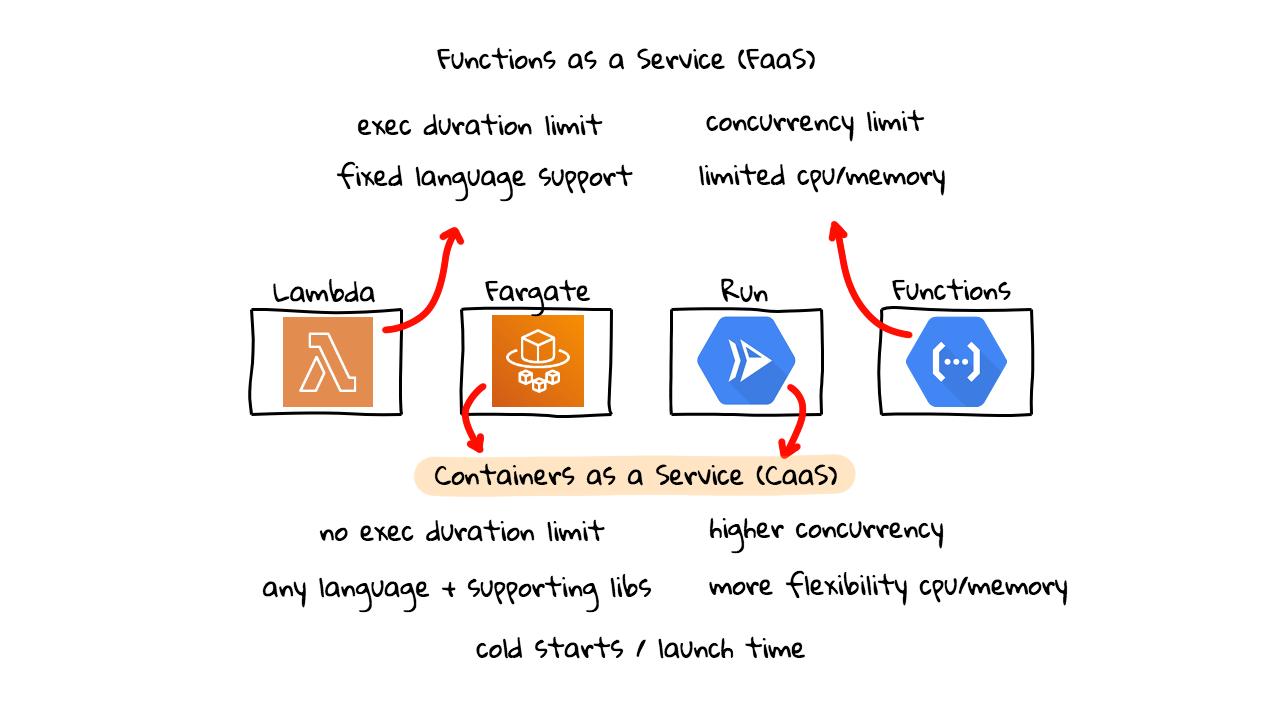

So, where do products like Fargate and Cloud Run fit in? Well, this is what I think of as Containers as a Service (CaaS). It looks very similar to what is happening above with Functions as a Service (FaaS). We are still asking a Cloud Provider to run some of our code, but this time it is packed in a Docker container. Plus, many of the limitations above are relaxed. Instead of a hard function execution time limit, the limits are much looser: a Fargate task can keep running as long as you like, although Cloud Run does still apply a timeout to each individual request. You are just charged by the amount of CPU, Memory, and other resources you use, things like networking, etc. Next, you can use any language, code, and supporting libraries you want, because this is all wrapped in a container, which you have full control of. I’ll show you a demo of this later. There is also much higher request concurrency allowed, and you have more flexibility in the CPU and Memory options. Although, as you’ll see, Cloud Run is still pretty limited today. The AWS stuff is much more mature. But there are some limitations with these offerings too. If you go down this route and install tons of stuff in your containers, the cold start, or boot time, can be pretty long for real-time applications. So, you want to optimize as much as possible. So, this is what I think of as Containers as a Service (CaaS).

So, what’s the difference between these two categories of tools? Well, from an end user perspective, these likely act and appear very similar. However, from a programming/DevOps perspective, the Functions as a Service category has narrower criteria for what you are allowed to do. So, if you need something a little more complex, then you might explore things like Fargate and Cloud Run. This episode will be a first look at Cloud Run, and I was thinking we should check out Fargate shortly too, so we can compare things. You might be thinking, what are some use-cases for this heavier-weight container option? Well, say you need to do things like complex image processing and need very custom software libraries; you could easily do this with containers. Maybe you need some complex data extraction using custom R scripts or something. You can basically do anything you want using these tools down here, as you have complete control over what goes into the container. There are all types of use-cases, things like data extraction, conversion, webhooks, sending notifications, etc.

Before we dive in too much, there was a pretty good launch announcement blog post last week that outlines many of these concepts too. It’s worth a read if you’re interested in this space. There were also two really good Hacker News threads around this topic. There were lots of comments about the differences and similarities between Lambda, Fargate, and Cloud Functions. A few of the developers and product managers from the Cloud Run team were in there talking about the roadmap too. So, this is pretty interesting even if you’re totally on AWS, as it signals where things are headed in this space. So, I thought it was worth pointing out.

Alright, so I just wanted to jump over to the documentation pages for a minute and call out a couple of things, as it will help explain what’s happening in the demos. So, when you’re running your custom container code, you will likely want to connect to things like storage, databases, cache servers, etc., to grab or process data. This also applies to Lambda, Fargate, and others. You want to check that the services you depend on are supported. Just something to be aware of.

Next up, this page walks you through what’s happening behind the scenes. So, when we ask Cloud Run to run our container, it will create something called a service, and our containers back that. You can also have multiple revisions of a service as we iterate on things. Next, here’s a page that chats about how request concurrency works. Basically, you can control how many requests a single container instance handles at once, before things kick in behind the scenes and scale your container instance count up.
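To make the concurrency setting a little more concrete, here is a minimal Python sketch of the arithmetic. It is only an approximation under stated assumptions: the function name `instances_needed` and the concurrency value of 80 are illustrative choices, not something shown in the episode, and the real autoscaler almost certainly looks at more than a simple division.

```python
import math


def instances_needed(in_flight_requests: int, concurrency: int) -> int:
    """Rough estimate of container instances needed for a given load.

    Assumption: each instance serves at most `concurrency` requests at
    once, and the platform adds instances once that limit is exceeded.
    """
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    if in_flight_requests <= 0:
        return 0  # nothing in flight, so the service can scale to zero
    return math.ceil(in_flight_requests / concurrency)


if __name__ == "__main__":
    # 80 is an illustrative per-instance concurrency setting.
    for load in (0, 1, 80, 81, 400):
        count = instances_needed(load, concurrency=80)
        print(f"{load} in-flight requests -> {count} instance(s)")
```

Setting the concurrency to 1 in this model gives one instance per in-flight request, which is closer to how the Functions as a Service products behave.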

Next, we come to pricing. Cloud Run charges you for the resources you consume, rounded up to the nearest 100 milliseconds, which is pretty crazy. Here’s a breakdown of what CPU and Memory costs are like. Down here, there is a diagram that helps explain how things are billed when a few overlapping requests come in too. This is likely way cheaper, with less operations overhead, than running your own Kubernetes cluster. Something I haven’t mentioned yet is that since this is based on the Knative project, and Knative is open-source, you can run this on your own Kubernetes clusters too. So, this workflow should be totally transferable off the cloud too. But for me, the interesting part of all this is the managed service offering.

Finally, I wanted to quickly call out quotas. This is often overlooked and applies to AWS too. If you are looking to use this type of stuff, check out the quotas and limits pages, as they walk you through max CPU, memory, request sizes, etc. These pages will save you tons of troubleshooting time.
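Here is a rough Python sketch of how that billing model could be worked through by hand. Everything specific in it is an assumption for illustration: the per-second rates are placeholders rather than quoted prices, the helper names are made up, and the rule that overlapping requests on one instance are merged and each merged span is rounded up to 100 milliseconds is my reading of the description above, not a verified formula. Check the pricing page before relying on any number.

```python
import math

# Placeholder rates for illustration only; see the pricing page for real ones.
CPU_PRICE_PER_VCPU_SECOND = 0.000024
MEMORY_PRICE_PER_GIB_SECOND = 0.0000025
BILLING_INCREMENT_MS = 100


def merge_intervals(requests):
    """Merge overlapping (start_ms, end_ms) request windows on one instance."""
    merged = []
    for start, end in sorted(requests):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous window, so extend it instead of adding one.
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return merged


def billable_ms(requests):
    """Total billable time, rounding each merged window up to 100 ms."""
    total = 0
    for start, end in merge_intervals(requests):
        increments = math.ceil((end - start) / BILLING_INCREMENT_MS)
        total += increments * BILLING_INCREMENT_MS
    return total


def estimate_cost(requests, vcpus=1.0, memory_gib=0.25):
    """Estimated CPU plus memory cost for one instance (requests fee excluded)."""
    seconds = billable_ms(requests) / 1000
    per_second = (vcpus * CPU_PRICE_PER_VCPU_SECOND
                  + memory_gib * MEMORY_PRICE_PER_GIB_SECOND)
    return seconds * per_second


if __name__ == "__main__":
    # Two overlapping requests, then a short one after an idle gap.
    sample = [(0, 250), (100, 420), (1000, 1030)]
    print("billable ms:", billable_ms(sample))
    print("estimated cost:", estimate_cost(sample))
```

In the sample, the first two requests overlap, so they are billed as one 420 ms window (rounded up to 500 ms) rather than as two separate windows, and the idle gap before the third request costs nothing.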

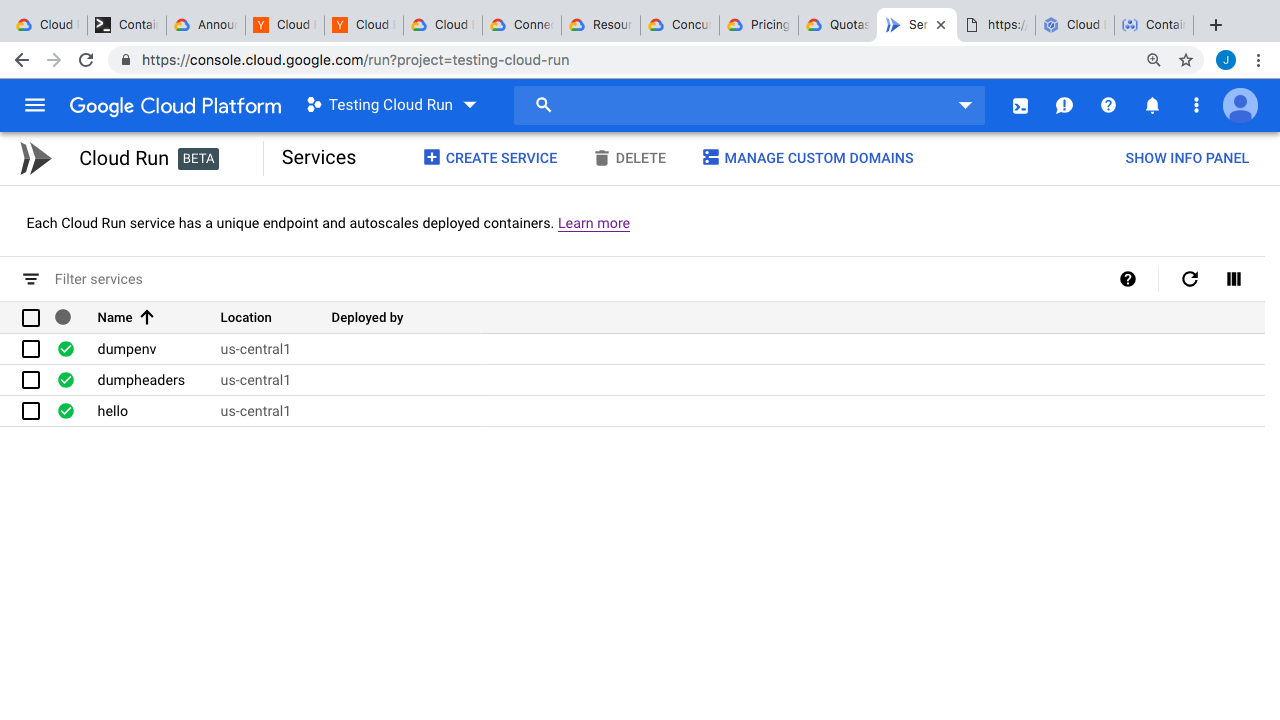

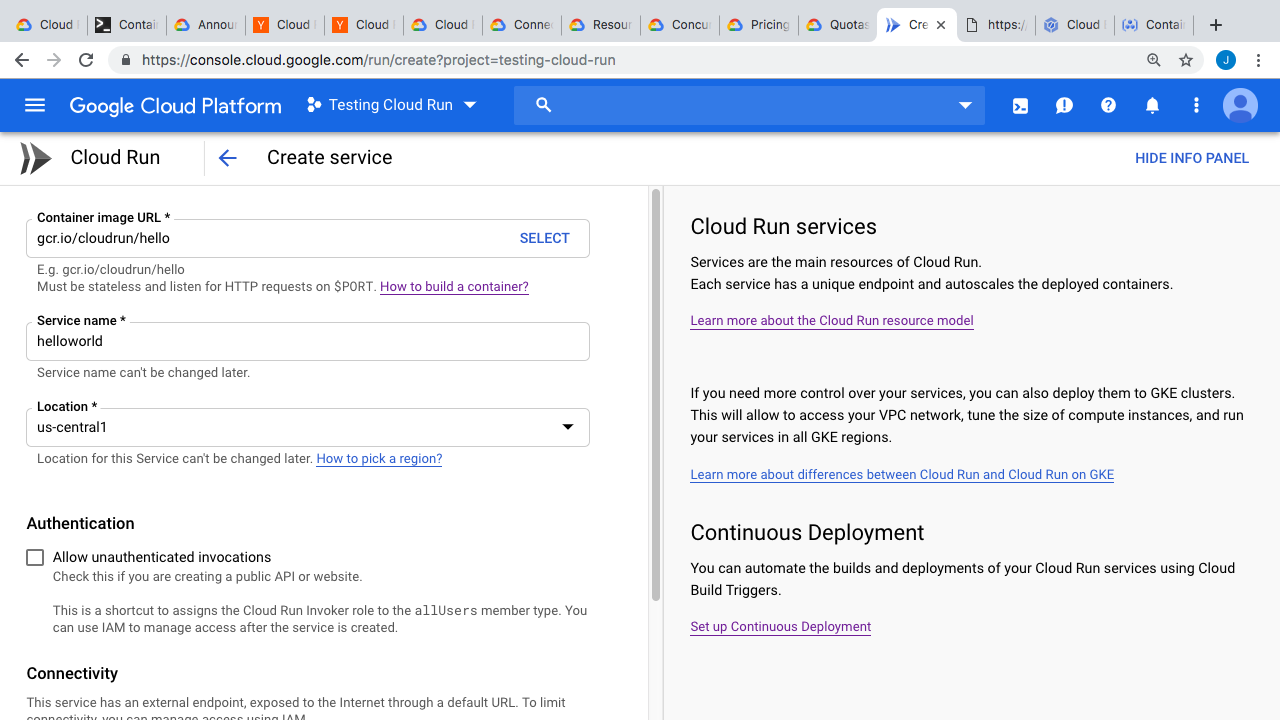

Alright, demo time. So, I have the Cloud Console loaded up here. Again, I am thinking in a few weeks here, we’ll do these same demos on AWS Fargate too. So, under this hamburger menu, you can scroll down into the compute area, and find Cloud Run. This is the interface, and my candid comments are it’s pretty sparse, and still looks like a very early Beta.

You can create a service here. Just specify the container you want to run, I’m just going to copy and paste the Hello World demo here, you can give it a name too. Right now, it looks like the only region that is available is us-central1. I am going to check this box, to allow external traffic from the internet to this container, and then click Create.

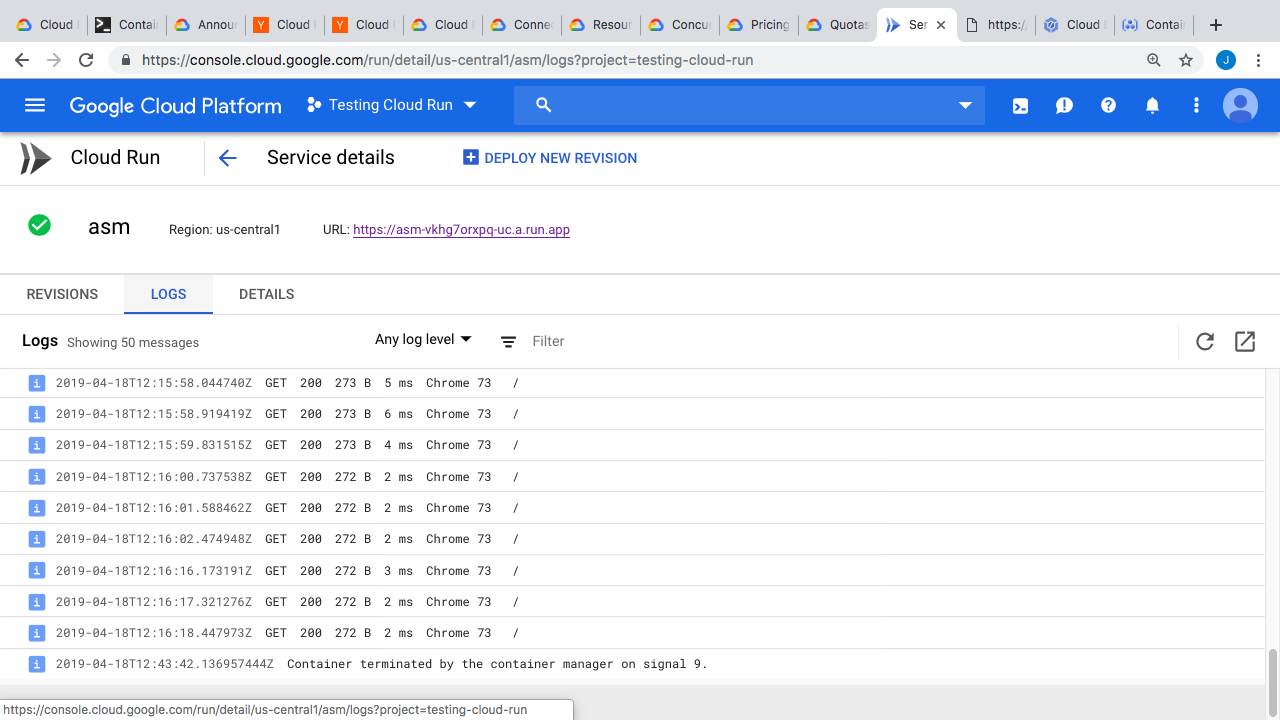

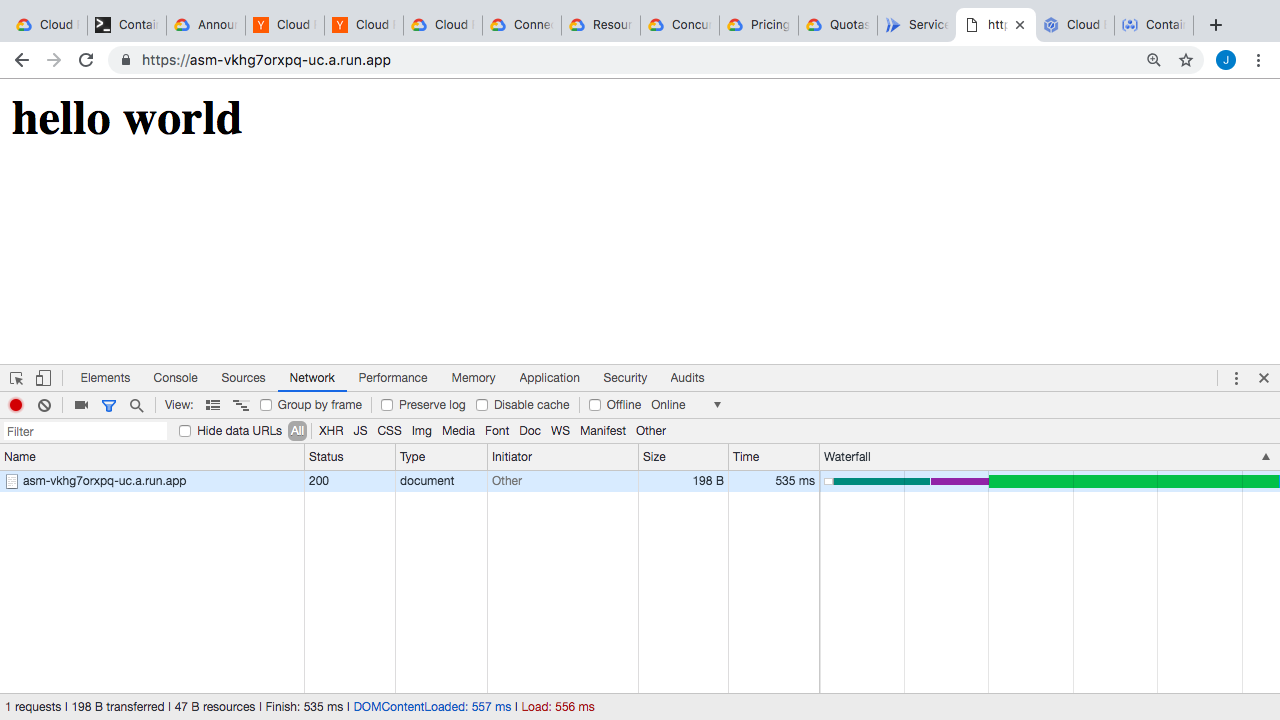

What’s happening behind the scenes here is that Cloud Run is pulling down this container, creating a service revision, routing traffic, and allowing external access to it. Then, you get this nice little web link that you can click. So, this page here is running off a container we launched, but we are not managing any of the infrastructure. All of that is taken care of for us. Plus, you are only paying when you are using compute and memory for requests. Pretty awesome, right? Back at the console, you can do things like view logs, and you can see our requests in here. There is a details tab too. Not much in here though. Back on the service revisions page, you can see the service details, like the container image we are using, the memory, and the concurrent connections allowed. You can also view the YAML file here.

So, that’s the concept in a nutshell. Basically, take your container image and run it on their infrastructure. They handle all the backend, under-the-hood stuff. I was sort of interested in running a few of my own containers as demos though. So, one of the first questions I had was, what environment variables are exposed to your application, in the container, when you make a request? Well, let’s jump over to an editor and look at a few containers we’ll be testing out.

So, we already looked at this hello world example. I downloaded the example code from the documentation pages and there is really nothing to it. Just a simple web server and a Dockerfile. The core idea, though, is that you have total control over what you put into your containers, so you can build to your exact needs without the narrower constraints of a functions platform.

So, this is the container that will dump all environment variables when we connect to it via our browser. We’re just grabbing the hostname, all environment variables, and printing that out. Then, here’s the Dockerfile. We’re copying our code, compiling the program, and then setting the web server as the launch command.
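The demo code itself is not reproduced in these notes, and it appears to be a small Go program. As a stand-in, here is a minimal Python sketch of the same idea: a web server that prints the hostname and every environment variable. The names `render_environment`, `DumpEnvHandler`, and `make_server` are hypothetical, and this is an illustration of the technique rather than the code used in the episode.

```python
import os
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_environment(environ) -> str:
    """Format the hostname and all environment variables as plain text."""
    lines = [f"hostname: {socket.gethostname()}", ""]
    lines += [f"{key}={value}" for key, value in sorted(environ.items())]
    return "\n".join(lines) + "\n"


class DumpEnvHandler(BaseHTTPRequestHandler):
    """Answers every GET request with the container's environment."""

    def do_GET(self):
        body = render_environment(os.environ).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    """Build (but do not start) the server, listening on all interfaces."""
    return HTTPServer(("0.0.0.0", port), DumpEnvHandler)
```

To run it as a container entrypoint, something like `make_server(8080).serve_forever()` would start the server; port 8080 is an assumption that matches the port used in the Dockerfiles later in the episode.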

Let’s jump to the command line and deploy this. I’ll link to the quickstart documentation where you can find all these commands. Also, I’ve posted all my demos to my GitHub page. Links are in the episode notes below.

Alright, so first I need to initialize things with Google Cloud since we’re doing this from the command line. Just going to set my default region to us-central1 since that’s the only one that works with Cloud Run for now. Next, I’m going to set my working project, to testing-cloud-run, the one that I created for this demo.

    $ gcloud config set run/region us-central1
    Updated property [run/region].
    $ gcloud config set project testing-cloud-run
    Updated property [core/project].

Now, let’s submit the code for building this container. What’s happening here is we’re using Cloud Build to build the container behind the scenes. Maybe check out episode 58, as I go into more detail on this there. We can flip over to the console too and watch this happening live, and here’s the output of the container build process. Then, if you go over to the Docker registry, you can see our image here. This image is what we want to deploy into Cloud Run. So, let’s jump back to the command line and deploy it.

    $ gcloud builds submit --tag gcr.io/testing-cloud-run/dumpenv
    $ gcloud beta run deploy --image gcr.io/testing-cloud-run/dumpenv

Let’s run this gcloud beta run deploy step, pointing at that newly created Docker image. It prompts us here for a few things, like the name, and whether we want to expose this publicly. Then, it launches it and we get this link. But before we check that, let’s head back to the console and verify things look like we would expect. I’m just going to refresh this. Cool, so you can see the new service we just launched here. By the way, I blurred out this area, since it has some of my account details listed here.

So, let’s click in here, open up that link, and connect to our newly launched container. So, here are the environment variables exposed inside our container. Mostly just application-specific stuff and nothing too interesting. I can just reload it a few times here. The thing to note here is you’re only paying for when this thing is actually servicing a request. So, the request has completed and we are not being charged anything. This could be pretty awesome for lots of use-cases where you need something pretty complex, but only once in a while.

Alright, so what about the HTTP headers? You think there is anything interesting in there? Let’s find out by jumping back to the editor. I’m using pretty much the same code here, but this time dumping all the HTTP headers. There is another change too, in the Dockerfile here. Before, we were using the alpine base image. This time, I am just using the scratch image, since we are statically linking the Go binary here. What this means is that there is nothing else in the container except our binary. So, this makes things a little smaller, but also more secure, as there is nothing else in here to run or exploit. I mostly just like it because it’s sort of cool.

    $ gcloud builds submit --tag gcr.io/testing-cloud-run/dumpheaders
    $ gcloud beta run deploy --image gcr.io/testing-cloud-run/dumpheaders

Let’s jump over to the command line and run those build and deploy steps again. I’m just going to speed this up heavily, since you already know what’s happening here. Alright, it’s done. Let’s open up this link. Cool, you can see the HTTP headers are dumped out now too. Again, there is nothing too interesting in here. But this might help debug things, say you wanted to get the user’s IP address or something, and were wondering what headers you had access to.

Let’s head back to the Cloud Console. So, we now have our three containers running here, and it doesn’t appear to take all that long to deploy things. But we’re doing some pretty simple things here, without much complex code or logic. I was wondering though, how small of a container could we create? We already tested out the scratch image, and if we check the registry for that dumpheaders container, you can see the image is around 4 MB.
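As an example of the debugging use-case mentioned above, here is a small Python sketch that formats request headers and pulls a client IP address out of them. It assumes the platform's front end adds an `X-Forwarded-For` header, which is a common proxy convention; the episode does not list which headers actually showed up, and the function names are hypothetical. Treat the header as a hint rather than proof, since clients can send their own value.

```python
def render_headers(headers) -> str:
    """Format request headers as one 'Name: value' line each, sorted by name."""
    return "".join(f"{name}: {value}\n" for name, value in sorted(headers.items()))


def client_ip(headers, fallback: str = "") -> str:
    """Best-effort client IP taken from an X-Forwarded-For style header.

    Assumption: the left-most entry is the original client and later
    entries are proxies that forwarded the request.
    """
    # Header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    forwarded = normalized.get("x-forwarded-for", "")
    first = forwarded.split(",")[0].strip()
    return first or fallback


if __name__ == "__main__":
    # Addresses from the documentation-only ranges, used purely as sample data.
    sample = {"Host": "example.invalid", "X-Forwarded-For": "203.0.113.7, 10.0.0.1"}
    print(render_headers(sample), end="")
    print("client ip:", client_ip(sample))
```

The `fallback` argument is there so a caller can pass the socket's peer address when the header is missing.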

But, I was wondering if we could go smaller. So, after a bit of searching around, I found this web server written in assembly code, and it’s roughly 6 KB. So, I compiled that code, and created a Dockerfile using the scratch image again too. The scratch image, is basically an empty container with nothing in it, there is no operating system, directories, nothing. So, this should be super small.

    FROM scratch
    ADD web_root /web_root
    ADD web-asm /web
    #ADD sample.txt.gz /sample.txt.gz
    CMD ["/web", "./web_root", "8080"]

So, let’s jump to the command line and run the build step here. I sped this up a little, but not much, as this went super fast. Alright, so let’s check out the Docker registry. So, this Docker image is 3.1KB. Pretty awesome, right? Just think about this: we have an assembly web server, sitting inside a totally blank Docker container, and we are sending live traffic to it. So, let’s jump back to the command line and deploy this thing. Again, I sped this up only slightly, as it is super lightweight.

    $ gcloud builds submit --tag gcr.io/testing-cloud-run/dumpheaders
    $ gcloud beta run deploy --image gcr.io/testing-cloud-run/dumpheaders

Let’s check out this link. Pretty cool, right? I’m just going to open the developer tools and watch the request latency in here. So, we’re getting around 300ms per request. I guess this is the time for the container to launch as our request is coming in? Okay, so this is a tiny container and no one is going to do this in real life, so let’s try something a little larger. I created this 800MB compressed file filled with random data. I am going to add it into the container and see what happens. Typically, Docker image layers get compressed in the registry. But since this is random data, and I already compressed it, we are actually going to be deploying an 800MB container. This is closer to what a more complex application might look like, running a base operating system, your code, and all your dependencies.

    FROM scratch
    ADD web_root /web_root
    ADD web-asm /web
    ADD sample.txt.gz /sample.txt.gz
    CMD ["/web", "./web_root", "8080"]

So, let’s uncomment the line in the Dockerfile to add this file in. Then, let’s run the build step. I’m going to speed this up heavily, as I am uploading 800MB over my internet connection here. That took about 15 minutes. Now, if we check the Docker registry, you can see there is a new version of our assembly container, and it is around 800MB.

Let’s go back to the console and deploy it. I had to speed this up quite a bit too, as I suspect a larger image like this just takes more time. Behind the scenes, they need to pull the image, find a place to run it, route traffic, etc. Alright, so we have the link now. Let’s open it. So, you can see it is a little slower to start, and then we are getting around the same speed as before. This is interesting, in that they must be doing lots of caching behind the scenes and have things prepared for launching quickly, because this is an 800MB image that we are launching here.

Alright, well that’s my first look at Cloud Run, and we got to look at what a few similar products look like, those being Lambda, Fargate, and Cloud Functions. They sort of function similarly for end users, but the implementation is way different. I really hope this trend of managed single container instances continues, as this could likely take care of lots of the low-hanging operations fruit out there.
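If you would rather script this comparison than eyeball it in the browser developer tools, here is a minimal Python sketch that times a handful of requests and separates the first (possibly cold) request from the rest. The function names are hypothetical, and the URL you pass in would be whatever link your own deployment printed; nothing here is specific to Cloud Run.

```python
import statistics
import time
import urllib.request


def time_request(url: str, timeout: float = 30.0) -> float:
    """Return the wall-clock time in milliseconds for one full GET request."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()  # include the time to download the body
    return (time.perf_counter() - start) * 1000.0


def summarize(samples_ms):
    """Summarize timings, keeping the first sample separate as a cold-start hint."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    return {
        "first": samples_ms[0],
        "min": min(samples_ms),
        "median": statistics.median(samples_ms),
        "max": max(samples_ms),
    }


def measure(url: str, count: int = 10):
    """Time `count` sequential requests against `url` and summarize them."""
    return summarize([time_request(url) for _ in range(count)])
```

Calling `measure()` with your service link right after a deploy, and again a minute later, gives a rough feel for how much of the latency is startup. Timings taken this way include your own network round trip, so they are only comparable to each other, not to the numbers in the episode.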

Alright, well that’s it for this episode. Hopefully you found that interesting. I like to check this new stuff out, just because it gives you a sense of where things are headed, and you never know where you might find a use-case for this. Thanks for watching and I’ll see you next week. Bye.