- Episode #48 - Static Sites using AWS S3, CloudFront, and Route 53 (1⁄5)

- Episode #49 - Static Sites using AWS S3, CloudFront, and Route 53 (2⁄5)

- Marco – One Page Theme

- Bootstrap - The world’s most popular mobile-first and responsive front-end framework.

- Google - bootstrap free theme

- Github: laurilehmijoki/s3_website

- Jekyll - Simple, blog-aware, static sites

- jekyll-import - Import your old and busted site to Jekyll

- Youtube: Intro to Jekyll

- AWS Identity and Access Management (IAM Best Practices)

- Google - leaked aws credentials

- AWS - Monitor Your Estimated Charges Using Amazon CloudWatch

- AWS S3 Regions and Endpoints

- http://websiteinthecloud.com

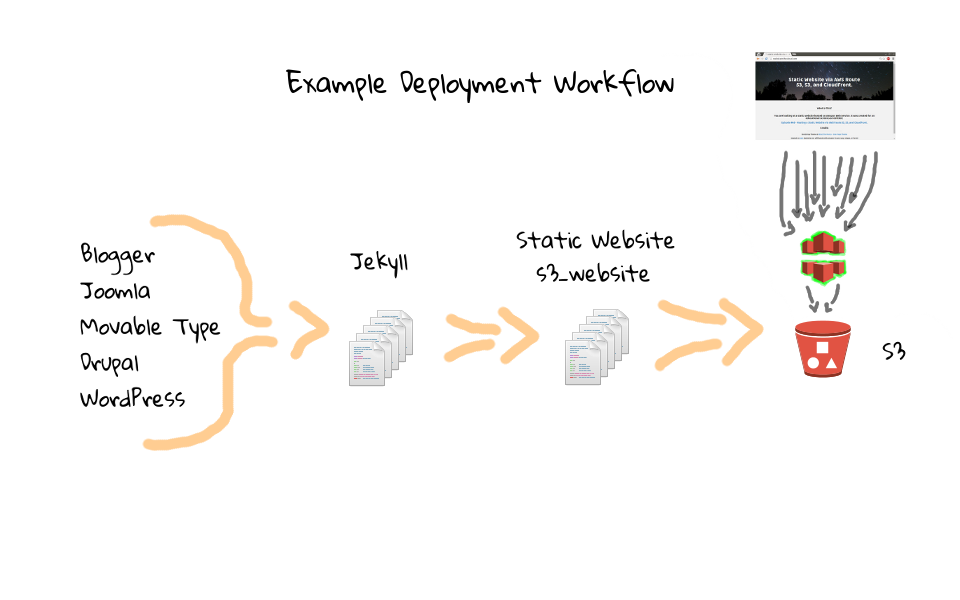

In this episode, we are going to look at an example deployment workflow for syncing static website files from a local directory to a remote S3 bucket.

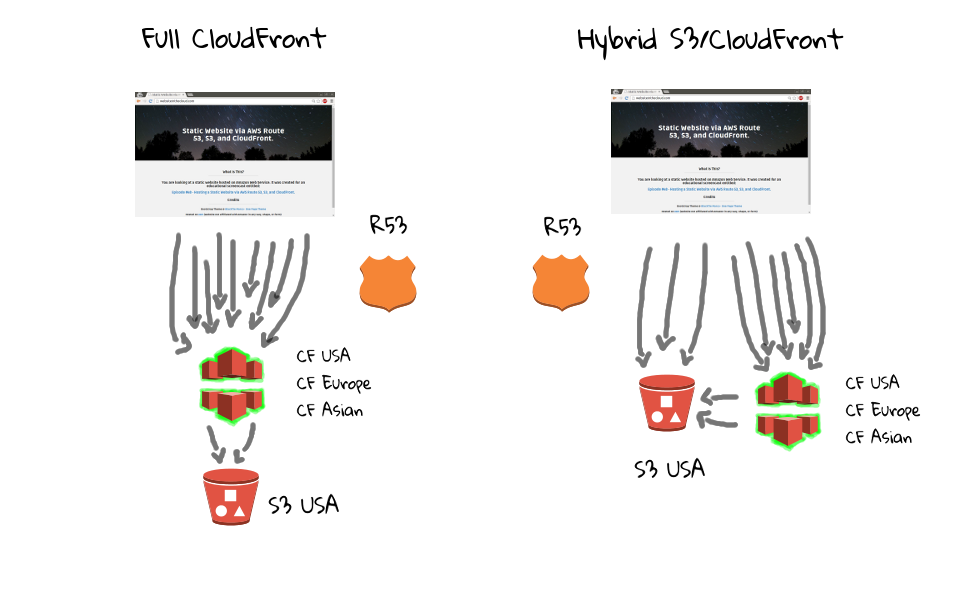

Back in part two of this series, we created the websiteinthecloud.com S3 bucket, which acts as the foundation for our two example static hosting environments. But this diagram is missing an important piece of the puzzle: the workflow for pushing content into an S3 bucket at scale.

I have put together a few diagrams that should help describe the deployment workflow, so we can kind of reverse engineer how the process works at a high level. On the far right, we have our websiteinthecloud.com S3 bucket, which we configured in the last episode. Then, let's say we have roughly fifty static website files sitting on our local machine that we want to push into the S3 bucket.

For the example website we are going to upload today, I downloaded a free one page Bootstrap theme from BlackTie. If you have not seen it before, Bootstrap is a really popular website framework that greatly speeds up web design, and there are tons of free themes out there too; you can just Google them. This theme has something like 10 nested directories and 40 plus files, mostly CSS stylesheets, images, JavaScript, and some HTML.

So, how do we go about getting this downloaded Bootstrap themed site into S3? Sure, you could use the S3 management console to upload these files manually, but this quickly becomes a major pain point, as you would constantly be creating directories, uploading files, creating more directories, uploading more files, and generally babysitting the upload process. There must be a better way, right? Well, that is where the s3_website package comes into play. It allows you to sync files from your local computer into an S3 bucket, very similar to rsync, but for S3.

Let's jump over to the s3_website project page on Github. s3_website is an active open-source project, with very extensive documentation and many example use cases. But, at its heart, s3_website simply allows you to sync a local folder's contents into a remote S3 bucket. A little later, we are going to look at a demo of getting s3_website installed, configured, and actually pushing our example Bootstrap themed site into the websiteinthecloud.com bucket. The goal is to give you a really good idea of how we can automate content uploads to S3 without babysitting the process too much.

While doing research for this episode, I found that many people are using a tool called Jekyll to generate static website content from a dynamic blog, then pushing it to Github or S3. I do not want to spend too much time talking about this, as it is highly dependent on your needs, but it is worth mentioning.

For the demo today, we will use a Bootstrap theme as the basis for our example site, but say you wanted to dump an existing dynamic blog into static files? Well, Jekyll allows you to do just that. Basically, Jekyll keeps a markdown version of your site in its own folder structure, and then you just run a command to export your static site for upload. The reason you use these intermediary markdown files is that you do not need to work with HTML directly; markdown is an easy to read and write plain-text syntax. You can generate these Jekyll markdown files from a dynamic blog using the import tool called jekyll-import, which has plugins for around 20 plus blog platforms. There is an Intro to Jekyll Youtube video linked in the episode notes below, and it does a great job of explaining Jekyll from the ground up.

So, the workflow to go from a dynamic blog to a static site would look something like this:

- Use jekyll-import to dump the dynamic blog into markdown files.
- Use Jekyll to convert these markdown files into a static version of your site.
- Use s3_website to upload the static files into S3.

This might look complex, but it is really only a couple of commands, and it can be automated rather quickly. But why would you want to convert a dynamic blog into a static format and upload it to S3? There are a whole host of reasons:

- You avoid the cost of running a virtual machine.
- Serving static content gives you a performance gain.
- It is more secure.

Security is actually a huge one. Blog software is an extremely big target; bots often crawl the internet looking for vulnerable sites, sometimes within 24 hours of a bug being found. So, with a static site, performance is typically better, there is less Ops overhead, and you are far less exposed to getting hacked.

Just to complete the picture here: in the following two episodes, we are going to configure CloudFront to pull files from this S3 bucket, then attach our websiteinthecloud.com domain to this CloudFront distribution. This slide pretty much sums up what this series is all about, giving you a high level overview of the end to end workflow and how files from your system are ultimately delivered to end users.

For this episode, we are only going to focus on using s3_website to sync static content from our local system into a remote S3 bucket. If there is demand, I can do something on Jekyll too, but for this series, it is a little out of scope. Hopefully my explanation was not too overboard with these diagrams and workflow examples. I just like to set the stage with an example problem, then solve it.

Okay, so now that you know what we are trying to do, and why, let's get our hands dirty. We can start by jumping back over to the s3_website page. If we scroll down, just below the "What s3_website can do" section, there are instructions on how to get s3_website installed, then a walkthrough of getting it configured.

To install s3_website, we just run gem install s3_website. You will need to have Ruby and Java already installed on your machine, so if you do not have these installed, you will need to figure that out first.
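s3_website will not install or run without those dependencies, so a quick pre-flight check can save some head scratching. The following is a minimal Python sketch, not part of s3_website, that looks for the `ruby`, `gem`, and `java` executables on your PATH. The exact tool list is an assumption based on the dependencies mentioned above.

```python
import shutil

# Assumed prerequisites for the s3_website gem: Ruby (plus its gem command) and Java.
REQUIRED_TOOLS = ("ruby", "gem", "java")


def missing_prerequisites(tools=REQUIRED_TOOLS):
    """Return the tools that cannot be found on PATH, in the order given."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_prerequisites()
    if missing:
        print("Install these first:", ", ".join(missing))
    else:
        print("Ruby and Java found; ready for 'gem install s3_website'.")
```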

Down here, we can walk through what the configuration process looks like:

- Create AWS API credentials with sufficient permissions to access our websiteinthecloud.com S3 bucket. I will talk more about this in a minute, as it plays into an AWS best practice.
- Create a directory for our static site.
- Run s3_website cfg create to generate a config file called s3_website.yml.
- Update this config file with our AWS API credentials.

Do not worry about this too much, as it will all become obvious when we see it in the demo.

You can actually use s3_website to create the S3 bucket for you, but we have already done that in the previous episode, as I wanted to show you what it looked like via the S3 management console.

Finally, when we have everything configured, we just type s3_website push. s3_website will read in the config file, access AWS S3 via your API key, and upload our static website files. What is cool about this is that it figures out what needs to be synced: it diffs what is currently in the S3 bucket against your local static site directory, and only pushes the changes out. This reduces tons of manual work to a single command, and it is quite efficient, both in terms of upload speed and bandwidth used.
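Here is a minimal Python sketch of how such a sync plan could be computed, to make the diff idea concrete. It is not how s3_website works internally. It assumes the remote state is available as a map of object keys to MD5 digests; for simple single-part uploads without KMS encryption, S3 reports the MD5 as the object's ETag. The `remote` dictionary in the example is made up, and `skip_names` is this sketch's own addition.

```python
import hashlib
import os


def local_digests(site_dir, skip_names=(".DS_Store",)):
    """Map each file under site_dir to the MD5 hex digest of its contents.

    Keys use '/' separators, like S3 object keys. Files whose base name is
    in skip_names (macOS .DS_Store metadata by default) are ignored.
    """
    digests = {}
    for root, _dirs, files in os.walk(site_dir):
        for name in files:
            if name in skip_names:
                continue
            path = os.path.join(root, name)
            key = os.path.relpath(path, site_dir).replace(os.sep, "/")
            with open(path, "rb") as fh:
                digests[key] = hashlib.md5(fh.read()).hexdigest()
    return digests


def plan_sync(local, remote):
    """Diff two {key: md5} maps and return which keys to create, update, or delete."""
    return {
        "created": sorted(k for k in local if k not in remote),
        "updated": sorted(k for k in local if k in remote and local[k] != remote[k]),
        "deleted": sorted(k for k in remote if k not in local),
    }


if __name__ == "__main__":
    local = local_digests("_site")
    # Hypothetical remote state; a real tool would list the bucket to get this.
    remote = {"index.html": "0" * 32, "404.html": "0" * 32}
    for action, keys in plan_sync(local, remote).items():
        print(f"{action}: {len(keys)} file(s)")
```

Keys that match on both sides with the same digest are left alone, which is what keeps repeat pushes cheap. Unlike this sketch, the real push in this episode did upload the macOS `.DS_Store` files.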

Let's circle back to the "create API credentials" task for a minute, where we create new credentials and put them into the s3_website.yml config file. If you are new to AWS, I just wanted to chat about two things that will totally save your butt in the event things go bad.

The first is to create dedicated role accounts for each tool, then lock these accounts down to the bare minimum permissions needed. In this episode, our s3_website tool only needs access to a specific S3 bucket, websiteinthecloud.com, so I have created a dedicated role account that can only access this bucket. On a UNIX system, you would not give out root access to Apache, MySQL, or regular users, or create shared accounts, and the same goes for AWS. Your default AWS credentials can do anything on your account. Much like root on a UNIX system, they can launch tons of virtual machines, chew through mountains of storage, or delete pretty much anything tied to your AWS account.

There is actually a great AWS Identity and Access Management best practices guide, which walks you through securing your AWS account, and it is a must read. Role accounts become extremely important from a security standpoint, because you will often want to automate things via API calls or automation tools. s3_website is a good example: we are embedding credentials in a config file, and in many setups they end up in an environment variable.

Let's apply this to our s3_website automation tool for a minute. Say we create a role account, but do not lock down its permissions to just a specific S3 bucket and CloudFront. If we accidentally leak this API credential, either by uploading the configuration file to Github or maybe during a demo, then someone can easily have free rein on your AWS account, just like if you shared a root password with them.

I hate to dwell on this, but just about every month or so, I read a story about someone who uploaded, or otherwise leaked, their unrestricted AWS account credentials, often while checking code into a public Github repository. This goes unnoticed for a few days, until they log into their AWS account and see a hundred virtual machines all mining Bitcoins or something, never mind the 10 thousand dollar AWS bill they now have. You need to treat API credentials with extreme care, but if one does leak out, make sure the account is limited in what it can do. This is where the Identity and Access Management best practices guide comes into play, as it walks you through all of this.

When these things happen, to Amazon's credit, in each and every story that I have seen, AWS credited the account and removed these huge billing charges. My intent is not to scare you off AWS. The point is that role accounts with least privileges assigned will greatly limit the scope of an already stressful security event. Luckily, it only takes a couple of minutes, and this best practices guide tells you everything you need to know.
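One cheap safeguard against the leak scenario above is to scan files for anything shaped like an AWS access key ID before they get committed. This is a minimal Python sketch that assumes the well-known access key ID format: `AKIA` for long-term keys or `ASIA` for temporary ones, followed by 16 uppercase letters or digits. It will not catch a secret key on its own, and it is no substitute for a least-privilege role account.

```python
import re
import sys

# Assumed key ID shape: "AKIA" (long-term) or "ASIA" (temporary) + 16 uppercase letters/digits.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_key_ids(text):
    """Return every substring of text that looks like an AWS access key ID."""
    return ACCESS_KEY_ID.findall(text)


def scan_files(paths):
    """Map each readable file that contains a likely key ID to the IDs found."""
    hits = {}
    for path in paths:
        try:
            with open(path, encoding="utf-8", errors="ignore") as fh:
                found = find_access_key_ids(fh.read())
        except OSError:
            continue  # unreadable or missing file: nothing to report
        if found:
            hits[path] = found
    return hits


if __name__ == "__main__":
    hits = scan_files(sys.argv[1:])
    for path, keys in hits.items():
        print(f"{path}: possible AWS access key ID(s): {', '.join(keys)}")
    if hits:
        sys.exit(1)  # non-zero exit so a pre-commit hook can block the commit
```

Run against a config file that still contains a literal `s3_id`, the script prints the match and exits with status 1. That makes it usable from a pre-commit hook. The script name and hook wiring are up to you.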

The second recommended best practice is to configure billing alerts, especially since AWS is pay as you go. The idea is that if you think your bill is going to be around 15 dollars a month, you configure an alert for slightly more, say 20 to 25 dollars a month. Say you are testing something and accidentally leave a large virtual machine running. Or there is a huge spike in download bandwidth charges on one of your sites, or some background process is all of a sudden chewing through tons of storage without you knowing. Any of these could leave you with a much larger bill than expected. A simple billing alert gives you a heads up before a big surprise bill. Again, this only takes a minute to configure, but will save you tons of hassle and stress.
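Here is a rough sketch of what such an alert involves. The Python function below builds the parameters for a CloudWatch alarm on the `EstimatedCharges` billing metric, with the threshold set to the expected bill times a margin. For example, 15 dollars times 1.5 gives 22.50, inside the 20 to 25 dollar range above.

The sketch makes a few assumptions:

- Billing alerts are enabled on the account.
- The alarm is created in us-east-1, where AWS publishes billing metrics.
- You would pass the dictionary to something like boto3's CloudWatch `put_metric_alarm`.

The SNS topic ARN is a hypothetical placeholder.

```python
def billing_alarm_params(expected_monthly_usd, margin=1.5, sns_topic_arn=None):
    """Build PutMetricAlarm-style parameters for an estimated-charges alert."""
    threshold = round(expected_monthly_usd * margin, 2)
    params = {
        "AlarmName": f"estimated-charges-over-{threshold:g}-usd",
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [{"Name": "Currency", "Value": "USD"}],
        "Statistic": "Maximum",
        "Period": 21600,  # six hours, in seconds; the estimate only refreshes a few times a day
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
    }
    if sns_topic_arn:
        # Hypothetical SNS topic that notifies you when the alarm fires.
        params["AlarmActions"] = [sns_topic_arn]
    return params


if __name__ == "__main__":
    print(billing_alarm_params(15))
```

The margin is a judgment call: too tight and normal month-to-month variation triggers it, too loose and the surprise is already large by the time you hear about it.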

Armed with these two best practices, limited privilege role accounts and billing alerts, you will definitely be ahead of the game, and I cannot recommend them enough.

Okay, enough about best practices and workflow diagrams; let's go and set up s3_website. Off camera, I have created my role account for this episode. There is a nice little guide here in the s3_website documentation with a policy you can assign to a role account to limit its access to a specific S3 bucket, along with CloudFront. I did not show you how to create role accounts for a reason: I think it makes sense to go through the guide, because it is packed with extremely helpful advice.
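For orientation, here is a minimal Python sketch that generates an IAM policy document in the spirit of that guide. It grants list access on the one bucket, object read, write, and delete inside it, and optionally CloudFront invalidations. It is an illustration, not the policy from the s3_website documentation. The exact set of actions s3_website needs may differ, so treat the guide as authoritative. The `Sid` names are arbitrary.

```python
import json


def s3_website_policy(bucket, allow_cloudfront_invalidation=True):
    """Build an illustrative least-privilege IAM policy for deploying to one bucket."""
    statements = [
        {
            # Listing is a bucket-level action, so it targets the bucket ARN.
            "Sid": "ListDeployBucket",
            "Effect": "Allow",
            "Action": ["s3:ListBucket"],
            "Resource": [f"arn:aws:s3:::{bucket}"],
        },
        {
            # Object-level actions target the keys inside the bucket.
            "Sid": "ManageDeployObjects",
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
            "Resource": [f"arn:aws:s3:::{bucket}/*"],
        },
    ]
    if allow_cloudfront_invalidation:
        statements.append(
            {
                "Sid": "InvalidateCloudFrontCache",
                "Effect": "Allow",
                "Action": ["cloudfront:CreateInvalidation", "cloudfront:GetInvalidation"],
                "Resource": "*",
            }
        )
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    print(json.dumps(s3_website_policy("websiteinthecloud.com"), indent=2))
```

Scoping `s3:ListBucket` to the bucket ARN and the object actions to `bucket/*` is what keeps a leaked key from touching any other bucket in the account.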

Let's jump to the command line and get started. First we need to install s3_website by running gem install s3_website. Again, you will need to have Ruby and Java installed, as they are dependencies, but I already have that done on my system.

    [~]$ gem install s3_website
    Fetching: s3_website-2.8.6.gem (100%)
    Successfully installed s3_website-2.8.6
    1 gem installed

Next, we need to create a project directory for the static website content. I have already created one called e50, so let's change into it and list the contents. You can see this _site directory. By default, s3_website looks for our static site in there, though this is configurable. Let's run tree against our _site directory. You can see that there are a bunch of files and directories in there; this is the single page Bootstrap theme we looked at earlier. You can download it from the episode notes below too.

    [~]$ cd e50
    [e50]$ ls -l
    [e50]$ tree _site

So, we have our project directory configured, and the _site directory with our example content. Let's generate a new s3_website config file by running s3_website cfg create. Then, let's open it up in an editor so we can take a look.

    [e50]$ s3_website cfg create
    Created the config file s3_website.yml. Go fill it with your settings.

    [e50]$ subl s3_website.yml

Right away, you can see where we need to put our s3_id, s3_secret, and s3_bucket strings. The first two, s3_id and s3_secret, are the credentials that you created for your restricted role account. The s3_bucket is the bucket you want to deploy to. I am just going to paste my values in here. Whoops, I just leaked some private API keys! Good thing these are just examples of what real values look like. You can see how easy it would be to leak keys during a demo, or accidentally check this file into public version control; you get the idea.

    s3_id: AKIAIOSFODNN7EXAMPLE
    s3_secret: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
    s3_bucket: websiteinthecloud.com

There are a bunch of settings you can tweak in here, and for the most part the defaults are okay. If you have questions, just check the s3_website Github page, as it is pretty good. The one thing you should fix is s3_endpoint, which points to the data centre our bucket is physically housed in. Luckily, this is pretty easy to figure out, so let's head to the AWS S3 management console for a second. If you select the S3 bucket properties, over here on the right, you can see the region is US Standard, but that does not tell us the exact value we need. Let's expand the static hosting option here, and you can see this endpoint link. In this link, you can see s3-website, then us-east-1, and that is the value we want. If we switch to that tab, the URL bar up here might be a little easier to read, and there is our us-east-1 value.
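The region is just a substring of the website endpoint, so it can also be pulled out programmatically. Here is a minimal Python sketch, assuming the two website endpoint styles AWS uses: `s3-website-<region>` and, for some regions, `s3-website.<region>`. It returns `None` for anything that does not look like a website endpoint.

```python
import re

# Website endpoints look like "<bucket>.s3-website-<region>.amazonaws.com" or,
# in some regions, "<bucket>.s3-website.<region>.amazonaws.com".
WEBSITE_ENDPOINT = re.compile(r"\.s3-website[-.]([a-z]{2}(?:-[a-z]+)+-\d+)\.amazonaws\.com")


def region_from_website_endpoint(url):
    """Return the region embedded in an S3 website endpoint, or None."""
    match = WEBSITE_ENDPOINT.search(url)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(region_from_website_endpoint(
        "http://websiteinthecloud.com.s3-website-us-east-1.amazonaws.com"))
```

Fed the endpoint from the console, this yields the same us-east-1 value we read off by eye.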

Depending on the region you picked, there could be a wide range of values, but there is a good documentation page that can help. As always, all links can be found in the episode notes below, so do not worry about remembering this. You can see here a listing of region specific endpoints, which you can use when sending REST API calls, like what we are doing with s3_website. Then down here, there is a long table mapping region names to region endpoint values: us-east-1, us-west-2, eu-west-1, etc. You get the idea.

Okay, so armed with our us-east-1 value, let's head back to the editor and uncomment this s3_endpoint value. Now, us-east-1 is the default in here, but there is a good chance you did not pick this region, so I just wanted to make sure you did not run into an error when we deploy our site.

    s3_endpoint: us-east-1

At this point, we are done with all the config steps. We have our role account API key set up, specified the bucket to use, and set the region s3_website should send commands to. So, let's save this and head back to the command line.

Everything should be lined up now. We know what the workflow looks like, covered some good AWS best practices, configured s3_website, and have our example content sitting in the _site directory, so let's push this out. Actually, wait a sec, we should probably quickly review what the before site looks like, so we have something to compare with. So, we have a site that says, Hello World, Testing one, two, three. Let's head back to the command line and update this test page with our slick looking one page Bootstrap themed site.

    [e50]$ ls -l
    total 8
    -rw-r--r-- 1 jw jw 1270 Apr 29 23:37 s3_website.yml
    drwxr-xr-x 3 jw jw 4096 Apr 5 01:59 _site

We just need to run s3_website push.

    [e50]$ s3_website push
    [info] Deploying /home/jw/e50/_site/* to websiteinthecloud.com
    [succ] Deleted 404.html
    [succ] Created assets/img/portfolio/.DS_Store (application/octet-stream)
    [succ] Created assets/img/loader.gif (image/gif)
    [succ] Created assets/img/cta/.DS_Store (application/octet-stream)
    [succ] Created assets/.DS_Store (application/octet-stream)
    [succ] Created assets/img/team/.DS_Store (application/octet-stream)
    [succ] Created assets/img/.DS_Store (application/octet-stream)
    [succ] Created .DS_Store (application/octet-stream)
    [succ] Created assets/img/clients/client02.png (image/png)
    [succ] Created assets/img/portfolio/port03.jpg (image/jpeg)
    [succ] Created assets/img/portfolio/port04.jpg (image/jpeg)
    [succ] Created assets/img/clients/client04.png (image/png)
    [succ] Created assets/img/clients/client03.png (image/png)
    [succ] Created assets/js/classie.js (application/javascript)
    [succ] Created assets/js/modernizr.custom.js (application/javascript)
    [succ] Created assets/js/imagesloaded.js (application/javascript)
    [succ] Created assets/img/portfolio/port01.jpg (image/jpeg)
    [succ] Created assets/img/clients/.DS_Store (application/octet-stream)
    [succ] Created assets/img/team/rebecca.png (image/png)
    [succ] Created assets/js/bootstrap.min.js (application/javascript)
    [succ] Created assets/js/.DS_Store (application/octet-stream)
    [succ] Created assets/img/portfolio/port06.jpg (image/jpeg)
    [succ] Created assets/js/AnimOnScroll.js (application/javascript)
    [succ] Created assets/js/retina.js (application/javascript)
    [succ] Created assets/img/clients/client01.png (image/png)
    [succ] Created assets/img/portfolio/port02.jpg (image/jpeg)
    [succ] Created assets/img/portfolio/port05.jpg (image/jpeg)
    [succ] Created assets/js/hover_pack.js (application/javascript)
    [succ] Created assets/fonts/fontawesome-webfont.woff (application/octet-stream)
    [succ] Created assets/img/team/gianni.png (image/png)
    [succ] Created assets/img/bg01.jpg (image/jpeg)
    [succ] Created assets/css/.DS_Store (application/octet-stream)
    [succ] Created assets/fonts/glyphicons-halflings-regular.woff (application/octet-stream)
    [succ] Created assets/css/main.css (text/css; charset=utf-8)
    [succ] Created assets/css/hover_pack.css (text/css; charset=utf-8)
    [succ] Created assets/fonts/glyphicons-halflings-regular.ttf (application/x-font-ttf)
    [succ] Created assets/img/team/william.png (image/png)
    [succ] Created assets/fonts/fontawesome-webfont.eot (application/vnd.ms-fontobject)
    [succ] Created assets/fonts/glyphicons-halflings-regular.eot (application/vnd.ms-fontobject)
    [succ] Created assets/fonts/glyphicons-halflings-regular.svg (image/svg+xml)
    [succ] Created assets/css/colors/color-74c9be.css (text/css; charset=utf-8)
    [succ] Created assets/css/animations.css (text/css; charset=utf-8)
    [succ] Created assets/fonts/FontAwesome.otf (application/x-font-otf)
    [succ] Created assets/css/font-awesome.min.css (text/css; charset=utf-8)
    [succ] Created assets/css/colors/.DS_Store (application/octet-stream)
    [succ] Updated index.html (text/html; charset=utf-8)
    [succ] Created assets/img/cta/cta01.jpg (image/jpeg)
    [succ] Created assets/css/bootstrap.css (text/css; charset=utf-8)
    [succ] Created assets/img/cta/cta02.jpg (image/jpeg)
    [succ] Created assets/fonts/fontawesome-webfont.ttf (application/x-font-ttf)
    [succ] Created assets/fonts/fontawesome-webfont.svg (image/svg+xml)
    [info] Summary: Updated 1 file. Created 49 files. Deleted 1 file. Transferred 2.4 MB, 1.5 MB/s.
    [info] Successfully pushed the website to http://websiteinthecloud.com.s3-website-us-east-1.amazonaws.com

As you can see, there is a bunch of output as we sync our local _site folder, with the remote websiteinthecloud.com S3 bucket. If you tried to do this via the S3 web interface, it would quickly become unmanageable, especially if you wanted to only sync changes, as it might not be obvious what needs to be uploaded.

This goes back to our workflow diagram: with just a few minutes of work, we can now sync large amounts of data with a single command, no babysitting required. This would work really well via some automated method too. For example, if you wanted to go the Jekyll route, you could have a little script that would dump the static content, then push it out via s3_website.
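Here is a minimal Python sketch of such a script. It assumes a Jekyll project whose `jekyll build` writes to `_site` (Jekyll's default output directory), with `s3_website.yml` in the same project directory as in the demo. The one-time jekyll-import step is left out. The `--run` flag is this sketch's own convention: without it, the script only prints the commands it would execute.

```python
import subprocess
import sys


def deploy_commands(build_with_jekyll=True):
    """Return the commands for one deploy, in order."""
    commands = []
    if build_with_jekyll:
        # Regenerates the static site; Jekyll writes it to ./_site by default.
        commands.append(["jekyll", "build"])
    # Syncs ./_site to the bucket named in ./s3_website.yml.
    commands.append(["s3_website", "push"])
    return commands


def deploy(project_dir=".", dry_run=True, build_with_jekyll=True):
    """Print each command and, unless dry_run is set, run it in project_dir."""
    for cmd in deploy_commands(build_with_jekyll):
        print("+", " ".join(cmd))
        if not dry_run:
            # check=True stops the deploy if the build fails, so a broken
            # site is never pushed.
            subprocess.run(cmd, cwd=project_dir, check=True)


if __name__ == "__main__":
    args = [a for a in sys.argv[1:] if a != "--run"]
    deploy(args[0] if args else ".", dry_run="--run" not in sys.argv)
```

Running the build and the push as separate, checked steps means a failed Jekyll build never reaches the bucket.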

Let's head to the browser and check out what the final product looks like. We have our cool little header up here, a nice background image, and some simple text describing what this is all about. Well, that is about it for this episode, and I hope you found it useful. In the next two parts, we will cover connecting CloudFront to this S3 bucket, and then wrap up by connecting our websiteinthecloud.com domain to our CloudFront distribution.