- Episode #8 - Learning Puppet with Vagrant

- Git Workflow and Puppet Environments

- Episode #11 - Internal Git server with Gitolite

- The Road to the White House with Puppet & AWS - PuppetConf 2013

- AWS re:Invent BDT 207: Big Data and the US Presidential Campaign

- Rethinking Puppet Deployment

- Setting up a Git Commit Workflow with Puppet Enterprise

- Git - githooks Documentation

In this episode, we will be looking at a Git to Puppet deployment workflow pattern. I want to cover how Puppet code gets from your workstation onto production servers, and the bits in between.

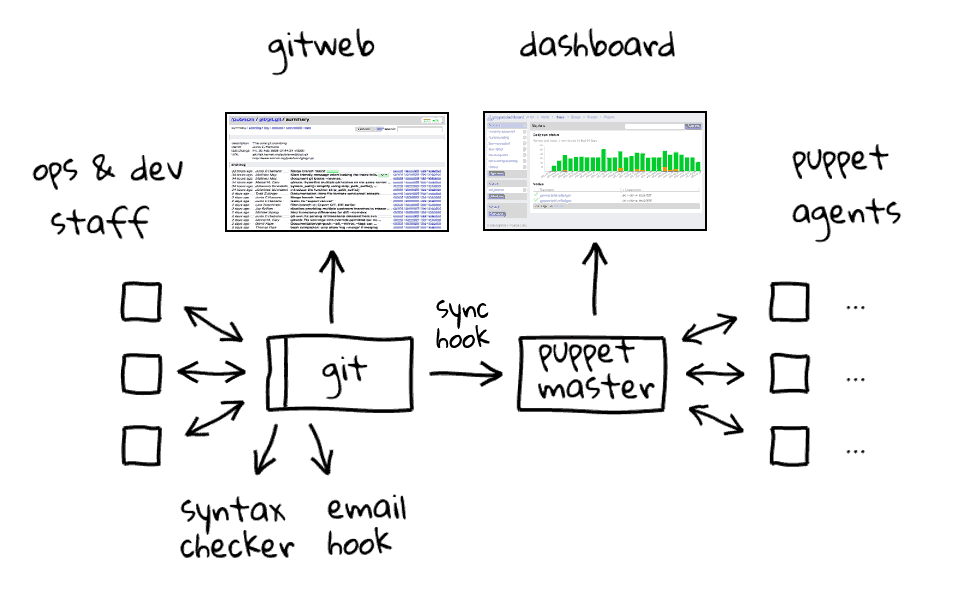

This diagram is basically the deployment workflow in a nutshell. But before we dive in, I should mention that I have no idea if this is a recommended solution. It just seems to work well for me, so I thought I would share. I am only concerned with managing the operating system and services, so I am not deploying custom-made applications, which would require continuous integration systems. This deployment workflow grew very organically as we started playing around with Puppet and then went into production. It took me quite a bit of reading, and then trial and error, to figure this out, so I thought it would be worthwhile to share my experience. That way, if you need to do something similar, you have a reference point.

Let me just jump back for a minute and give you a bit of history about how this workflow came to be. First off, Puppet is a configuration management tool created by Puppet Labs which allows you to automate many sysadmin-type tasks. Typically, Puppet is a client-server application, where you deploy manifests and modules to a Puppet server, and these describe how the configuration is supposed to look on client machines. Puppet comes in a commercial version and an open source version, and I am using the free open source version.

So, let's say you think Puppet sounds cool, and you start playing around with it. Maybe you even watched episode #8, where I talk about learning Puppet with Vagrant. After this point, you think it would be cool to use Puppet in production. This is where the deployment workflow comes into play. The recommended best practice is to put your Puppet code into version control. So, how do you get from version control, to the Puppet Master, and finally get the configuration onto client machines? Well, this is what I plan to talk about today.

For you to really understand how this deployment workflow functions, we should probably chat about what the main pieces are, and how changes flow through the system.

So, what are the main pieces? Well, we have our Ops and Dev staff over here, these are the guys and gals writing Puppet code on their workstations.

Next we have a central Git version control system, which holds the entire Puppet code base. This Git server is also running Gitolite for user authentication. I chat about using a central Git and Gitolite server in episode #11. This central Git server is a bit special though, in that we have Gitweb pointing at the Puppet code repository. Gitweb allows you to explore Git repositories through your web browser. For example, you can easily browse the revision history, file contents, and logs, view diffs, and even search the repository.

But the real magic happens by using something called Git hooks. These allow you to program actions, like sending email or running a script, when a predefined action happens to a repository. In this workflow we are using three Git hooks. The first one is a pre-commit hook. This checks each commit on our repository and only allows in commits with valid Puppet syntax. Those with bad syntax are rejected, and the submitter is shown a detailed error message. There is actually a great video overview of this on the Puppet Labs YouTube page.
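To make the syntax check a bit more concrete, here is a minimal sketch of what such a check could look like in Python. It is not the actual hook from this workflow. The function names are made up for illustration, and the only external piece it relies on is the `puppet parser validate` command. Keep in mind that Git runs `pre-commit` in the committer's own clone, while the hooks that run on a central server during a push are `pre-receive` and `update`, so the same check can be wired into whichever of those fits your setup.

```python
"""Sketch of a Puppet syntax check that a Git hook could call."""
import subprocess
import sys


def manifests(paths):
    """Keep only Puppet manifests (files ending in .pp)."""
    return [p for p in paths if p.endswith(".pp")]


def validate(path):
    """Run `puppet parser validate` on one file; return (ok, error_text)."""
    result = subprocess.run(
        ["puppet", "parser", "validate", path],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0, result.stderr.strip()


def check(paths, validator=validate):
    """Return (path, error) pairs for every manifest that fails validation."""
    failures = []
    for path in manifests(paths):
        ok, error = validator(path)
        if not ok:
            failures.append((path, error))
    return failures


def main(paths):
    """Print errors for the submitter and return the hook's exit status."""
    failures = check(paths)
    for path, error in failures:
        print(f"Puppet syntax error in {path}:\n{error}", file=sys.stderr)
    # A non-zero exit status is what tells Git to reject the change.
    return 1 if failures else 0
```

A hook script would gather the changed file paths (how you do that depends on which hook you use), pass them to `main`, and exit with the value it returns. The `validator` parameter is there so the logic can be exercised without Puppet installed.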

Next we have two post-receive hooks. The first one is an email hook. It grabs the Git commit details and sends them out to the Ops and Dev staff, along with a link to the Gitweb dashboard for easy viewing via a web interface. This keeps everyone in the loop as changes go into the system, and makes it quick to track down changes which might have broken something.
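Here is a rough sketch of how an email hook like this could be put together in Python. Git feeds a post-receive hook one line per updated ref on standard input, in the form `<old-sha> <new-sha> <ref-name>`. Everything else here is an assumption for illustration: the Gitweb base URL, the repository name, the addresses, and the function names are all hypothetical.

```python
"""Sketch of a post-receive notification email with a Gitweb link."""
from email.message import EmailMessage

# Hypothetical values for illustration; none of these come from the episode.
GITWEB_URL = "https://git.example.com/gitweb"
REPO = "puppet.git"
SENDER = "git@example.com"
RECIPIENTS = ["ops@example.com", "dev@example.com"]


def parse_updates(stdin_lines):
    """Parse post-receive input: '<old-sha> <new-sha> <ref-name>' per line."""
    updates = []
    for line in stdin_lines:
        parts = line.split()
        if len(parts) == 3:  # skip blank or malformed lines
            updates.append(tuple(parts))
    return updates


def gitweb_link(sha):
    """Build a Gitweb commit URL using its query-string form."""
    return f"{GITWEB_URL}/?p={REPO};a=commit;h={sha}"


def build_message(old, new, ref, details):
    """Compose the notification email for one updated ref."""
    msg = EmailMessage()
    msg["From"] = SENDER
    msg["To"] = ", ".join(RECIPIENTS)
    msg["Subject"] = f"[puppet] {ref} updated to {new[:7]}"
    msg.set_content(f"{details}\n\nView on Gitweb: {gitweb_link(new)}\n")
    return msg
```

In a real hook, `details` could come from something like `git log --stat old..new`, and the message could be handed to a local mail server with `smtplib.SMTP("localhost").send_message(msg)`.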

The second post-receive hook takes care of the Git to Puppet Master sync. This is actually a bash script, called from the post-receive hook. At its heart is a routine which dumps the Puppet repo to disk, then rsyncs it over to the Puppet Master. This might not be a best practice, but it seems to work really well in our use case. I would like to hear how you are using Puppet if you have something that works for you.
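The real sync script is bash and is not shown here, so take the following as a sketch of the same two steps in Python, under my own assumptions. The repository path, export directory, destination host, and branch name are all hypothetical. The sketch checks the branch out of the bare repo into a plain directory, then mirrors that directory to the Puppet Master with rsync.

```python
"""Sketch of a Git to Puppet Master sync: export the repo, then rsync it."""
import subprocess

# Hypothetical paths and host for illustration; adjust to your own setup.
GIT_DIR = "/home/git/repositories/puppet.git"
EXPORT_DIR = "/var/tmp/puppet-export"  # must already exist
DESTINATION = "puppet@puppetmaster.example.com:/srv/puppet-code/"
BRANCH = "master"


def export_command(git_dir=GIT_DIR, export_dir=EXPORT_DIR, branch=BRANCH):
    """Command that checks the branch out of the bare repo into export_dir."""
    return ["git", f"--git-dir={git_dir}", f"--work-tree={export_dir}",
            "checkout", "-f", branch]


def rsync_command(export_dir=EXPORT_DIR, destination=DESTINATION):
    """Command that mirrors export_dir to the Puppet Master.

    The trailing slash on the source copies the directory's contents rather
    than the directory itself. --delete removes anything on the destination
    that is not in the export, so point it at a directory the repo fully owns.
    """
    source = export_dir.rstrip("/") + "/"
    return ["rsync", "-az", "--delete", source, destination]


def sync(run=subprocess.run):
    """Export the repo, then push it over; stop on the first failure."""
    for command in (export_command(), rsync_command()):
        run(command, check=True)
```

Building the commands as lists keeps them easy to inspect and avoids shell quoting problems. The `run` parameter lets you do a dry run by passing in a function that just prints each command.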

In this next section, we have our Puppet Master and the Puppet Dashboard. So, the code base comes over from the Git repo on each commit via the post-receive hook. Then we have our Puppet Agents checking in with the Puppet Master server every 30 minutes, looking for updates. You can track the progress of the update using the Puppet Dashboard.

I should also mention that we have a central logging system, so everything from the clients, Puppet Master, and Git goes into the logging system. This allows us to track errors across all machines, although it is a bit outside the scope of this discussion.

Okay, so now that you know how this workflow functions at a high level, let's push through an example Puppet change. For the sake of this example, let's say we have a staff member who wants to deploy an sshd configuration tweak, which makes sure sshd is installed and configured on an end node. So, we have our staff member, let's call him Justin, and he wants to deploy this sshd configuration change out to some nodes managed by Puppet.

He worked locally on his system, maybe using Vagrant to test and verify the changes he wants to push out. He is finally happy, so he pushes the code into the central Git repository. The syntax is checked by a pre-commit Git hook, and luckily it appears okay, so the commit is accepted. Immediately, a post-receive hook is triggered, sending an email notification out to the Ops and Dev staff with the particulars about the commit, including a link to view the commit on Gitweb. Next, a second post-receive hook fires, which syncs the Git repo with the Puppet Master. This all happens in a matter of seconds. Finally, Puppet clients begin to check in on a rolling 30 minute cycle, and you can watch the updates with the dashboard or the central logging system.

So, that is basically it. I will likely create several more episodes going into detail about the Git hooks, Gitweb, and the Puppet server and Dashboard. However, I just wanted to get this out there, in case you run into the same issues as I did. There is also lots of documentation out on the Puppet Labs website about using Git branches and Puppet environments. This is worth checking out, see the link in the episode notes below. While doing research, I also ran across ways to optimize the Git to Puppet deployment workflow, which look like they might be useful, also linked to in the episode notes below.

Just to wrap up, here is what I think the good things about this pattern are. There is accountability and traceability for commits flowing into the system. Notifications keep people in the loop and allow for quick debugging. Even though this diagram looks complex, it is very simple, and broken into manageable pieces, which each do one thing and can be easily swapped out or improved. One thing that I would like to add to this is the ability to do code reviews or sign-offs, so that we have a second set of eyes looking at commits before they flow into production.

This workflow is good for other things too. For example, I deploy this website using something very similar, except, rather than rsync to a Puppet Master, I have my website repo sync with the infrastructure running the site, and it pushes the website content out. The beauty of these Git hooks is that you can write whatever you want in there, and it streamlines your deployment process.

Just before we end this episode, I found some really interesting links about how Puppet and AWS were used during the Obama Presidential campaign. They went from relatively nothing to thousands of machines within the span of a year using Puppet and AWS. If you are interested in Puppet and AWS, I highly recommend checking these out.